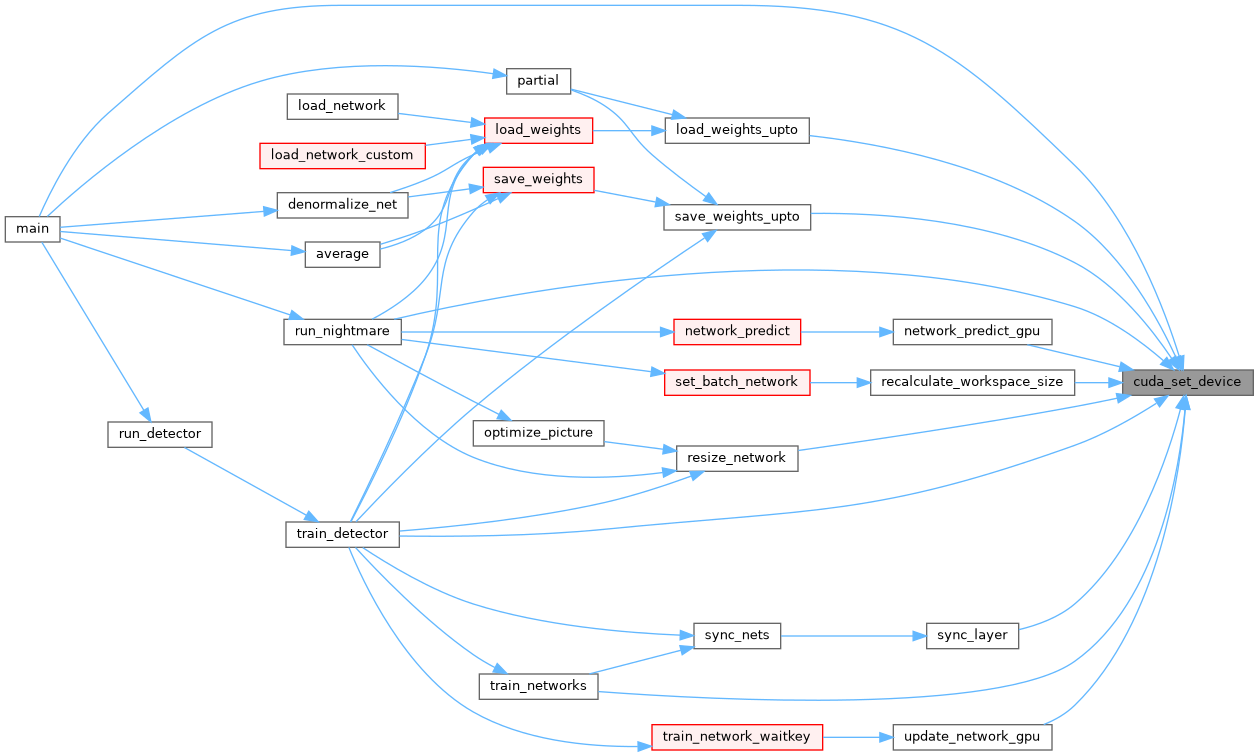

Functions | |

| void | cuda_set_device (int n) |

This is part of the original C API. This function does nothing when Darknet was built to run on the CPU. | |

Variables | |

| int | cuda_debug_sync = 0 |

| void cuda_set_device | ( | int | n | ) |

This is part of the original C API. This function does nothing when Darknet was built to run on the CPU.

| int cuda_debug_sync = 0 |