Macros

| #define | CUDA_KERNEL_LOOP(i, n) |

| #define | swap(a0, a1, j, m) t = (a0 ^ (a1 >>j)) & m; a0 = a0 ^ t; a1 = a1 ^ (t << j); |

Functions

| template<typename T > | |

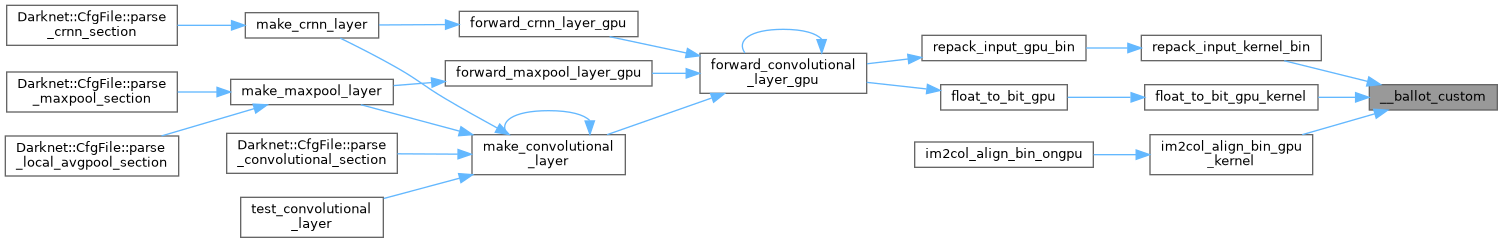

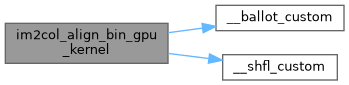

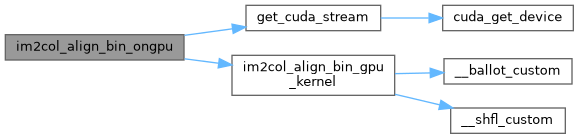

| __device__ uint32_t | __ballot_custom (T val) |

| template<typename T1 , typename T2 > | |

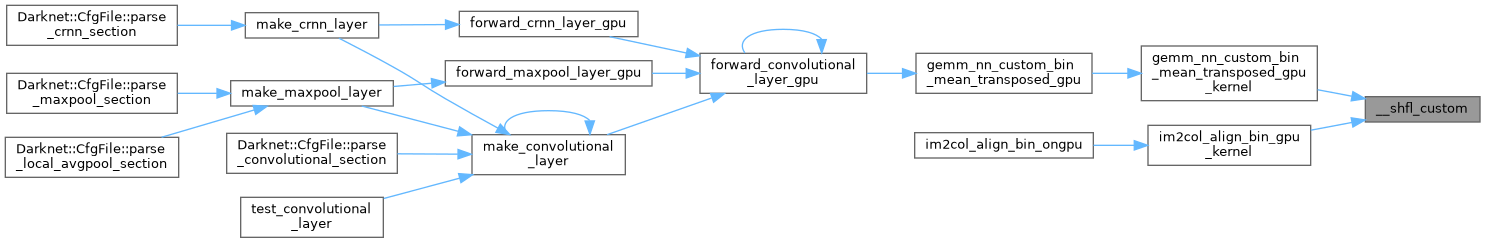

| __device__ T1 | __shfl_custom (T1 val, T2 lane) |

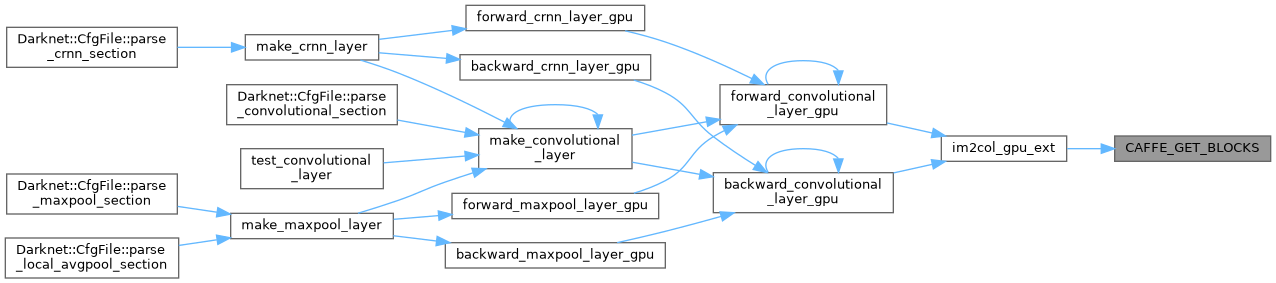

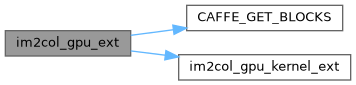

| int | CAFFE_GET_BLOCKS (const int N) |

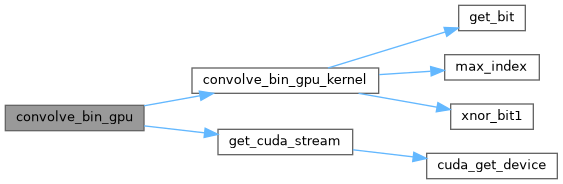

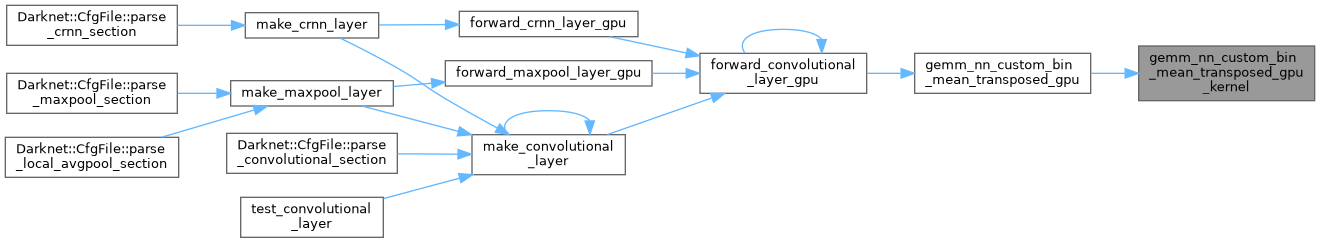

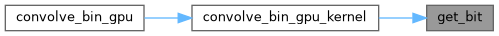

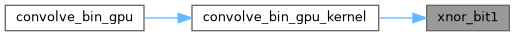

| void | convolve_bin_gpu (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad, int new_lda, float *mean_arr_gpu) |

| __global__ void | convolve_bin_gpu_kernel (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad, int new_lda, float *mean_arr_gpu) |

| void | convolve_gpu (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad) |

| __global__ void | convolve_gpu_kernel (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad) |

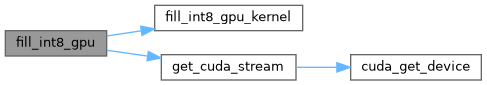

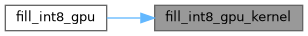

| void | fill_int8_gpu (unsigned char *src, unsigned char val, size_t size) |

| __global__ void | fill_int8_gpu_kernel (unsigned char *src, unsigned char val, size_t size) |

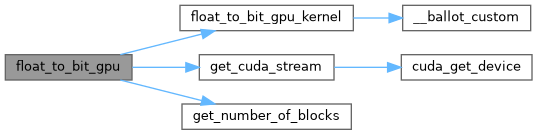

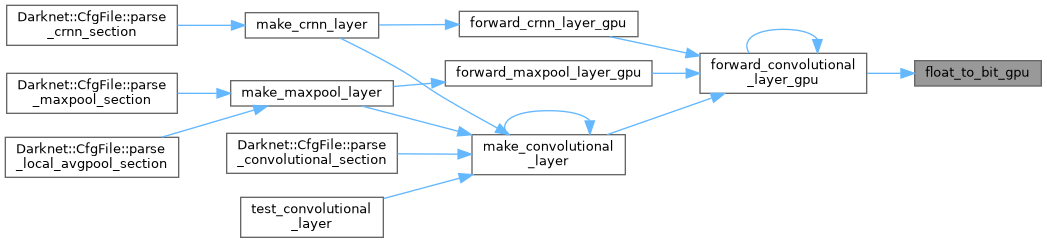

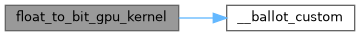

| void | float_to_bit_gpu (float *src, unsigned char *dst, size_t size) |

| __global__ void | float_to_bit_gpu_kernel (float *src, unsigned char *dst, size_t size) |

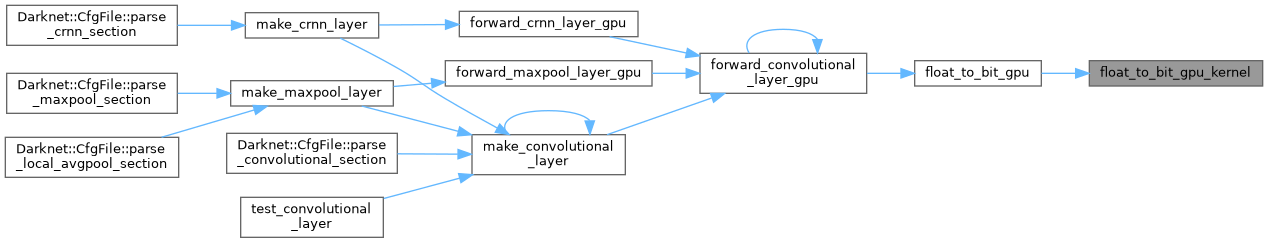

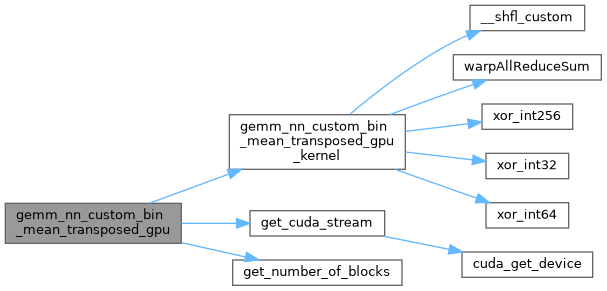

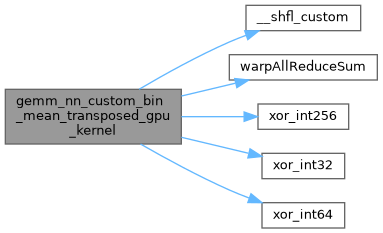

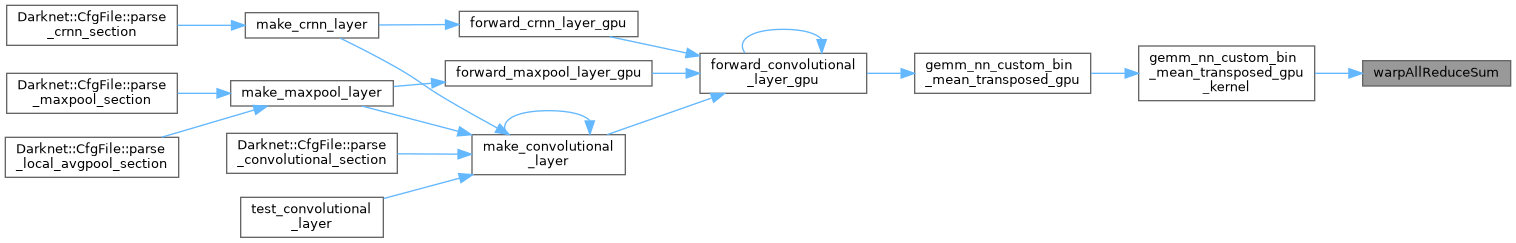

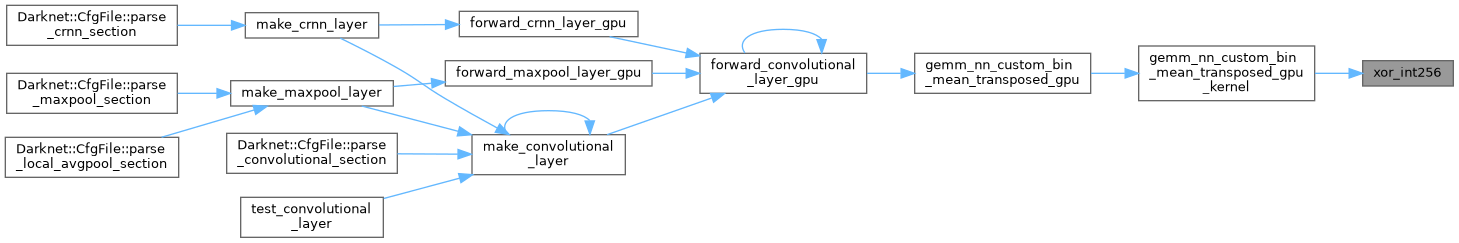

| void | gemm_nn_custom_bin_mean_transposed_gpu (int M, int N, int K, unsigned char *A, int lda, unsigned char *B, int ldb, float *C, int ldc, float *mean_arr, float *bias, int leaky_activation, float *shortcut_in_gpu, float *shortcut_out_gpu) |

| __global__ void | gemm_nn_custom_bin_mean_transposed_gpu_kernel (int M, int N, int K, unsigned char *A, int lda, unsigned char *B, int ldb, float *C, int ldc, float *mean_arr, float *bias_arr, int leaky_activation, float *shortcut_in_gpu, float *shortcut_out_gpu) |

| __device__ static __host__ unsigned char | get_bit (unsigned char const *const src, size_t index) |

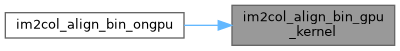

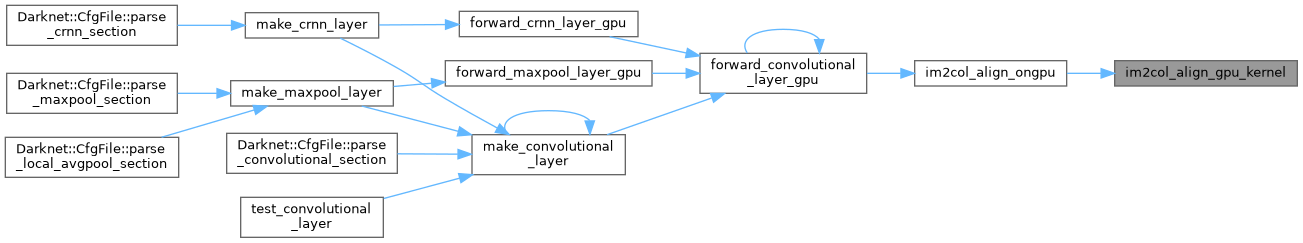

| __global__ void | im2col_align_bin_gpu_kernel (const int n, const float *data_im, const int height, const int width, const int ksize, const int channels, const int pad, const int stride, const int height_col, const int width_col, float *data_col, const int bit_align) |

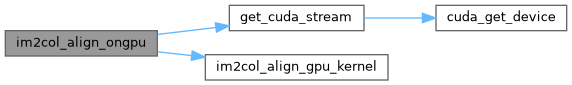

| void | im2col_align_bin_ongpu (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col, int bit_align) |

| __global__ void | im2col_align_gpu_kernel (const int n, const float *data_im, const int height, const int width, const int ksize, const int pad, const int stride, const int height_col, const int width_col, float *data_col, const int bit_align) |

| void | im2col_align_ongpu (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col, int bit_align) |

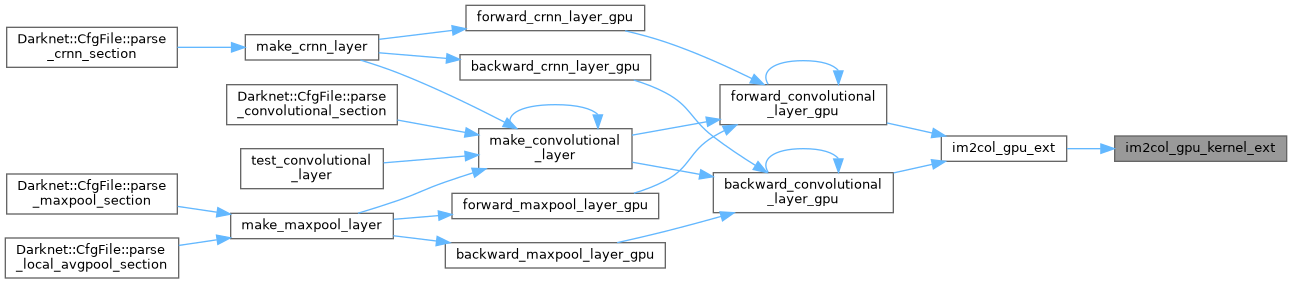

| void | im2col_gpu_ext (const float *data_im, const int channels, const int height, const int width, const int kernel_h, const int kernel_w, const int pad_h, const int pad_w, const int stride_h, const int stride_w, const int dilation_h, const int dilation_w, float *data_col) |

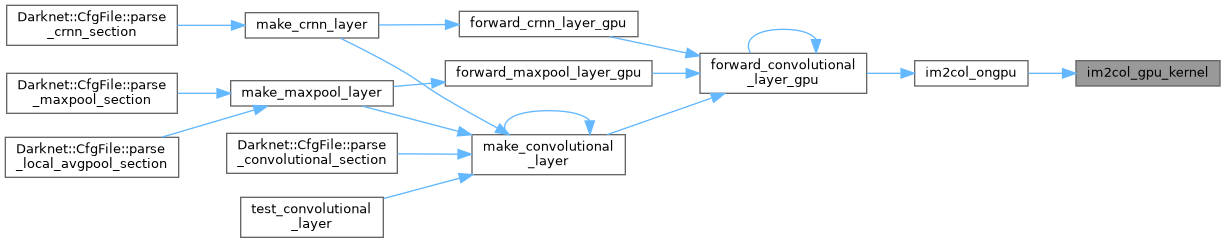

| __global__ void | im2col_gpu_kernel (const int n, const float *data_im, const int height, const int width, const int ksize, const int pad, const int stride, const int height_col, const int width_col, float *data_col) |

| __global__ void | im2col_gpu_kernel_ext (const int n, const float *data_im, const int height, const int width, const int kernel_h, const int kernel_w, const int pad_h, const int pad_w, const int stride_h, const int stride_w, const int dilation_h, const int dilation_w, const int height_col, const int width_col, float *data_col) |

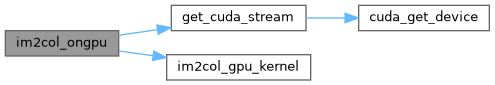

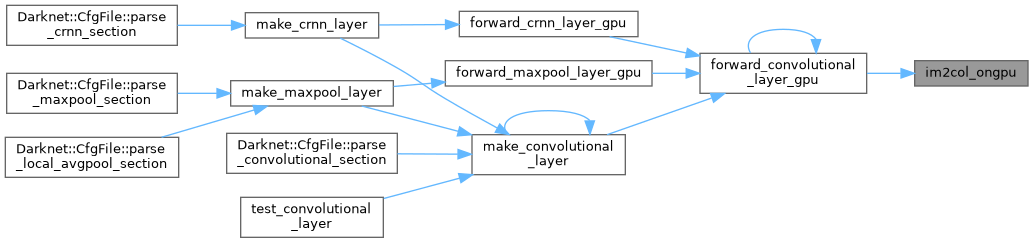

| void | im2col_ongpu (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col) |

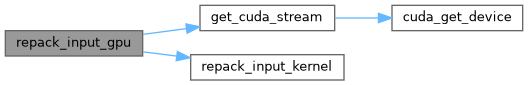

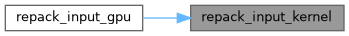

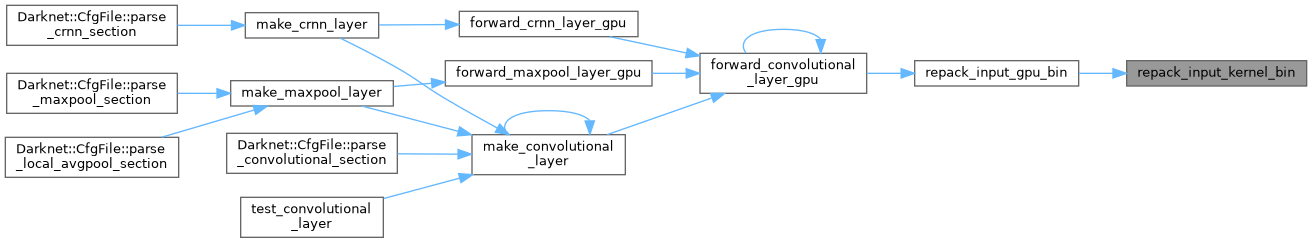

| void | repack_input_gpu (float *input, float *re_packed_input, int w, int h, int c) |

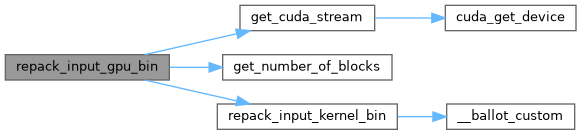

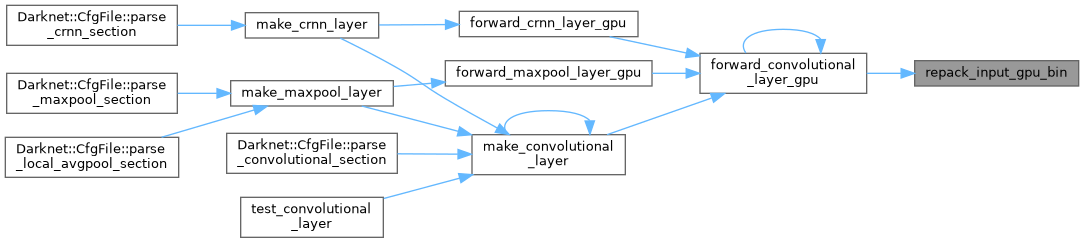

| void | repack_input_gpu_bin (float *input, uint32_t *re_packed_input_bin, int w, int h, int c) |

| __global__ void | repack_input_kernel (float *input, float *re_packed_input, int w, int h, int c) |

| __global__ void | repack_input_kernel_2 (float *input, float *re_packed_input, int w, int h, int c) |

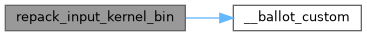

| __global__ void | repack_input_kernel_bin (float *input, uint32_t *re_packed_input_bin, int w, int h, int c) |

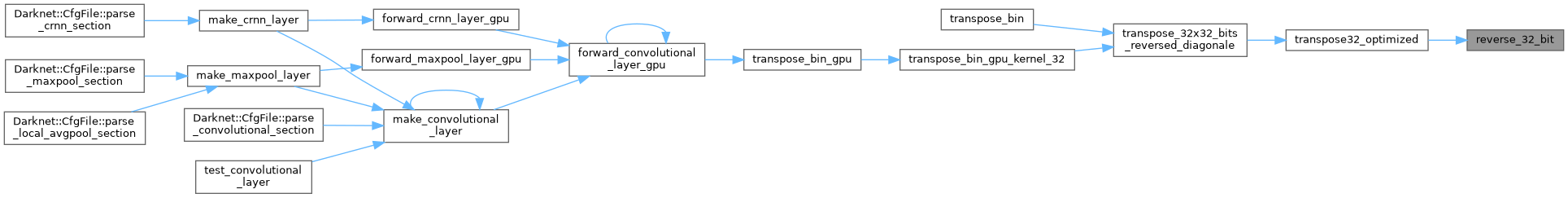

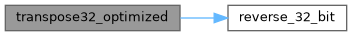

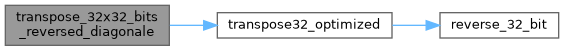

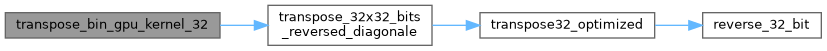

| __device__ uint32_t | reverse_32_bit (uint32_t a) |

| __device__ __host__ uint8_t | reverse_8_bit (uint8_t a) |

| __device__ __host__ unsigned char | reverse_byte (unsigned char a) |

| __device__ __host__ unsigned char | reverse_byte_2 (unsigned char a) |

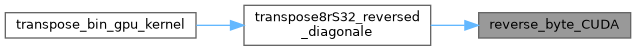

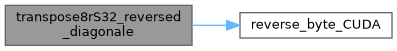

| __device__ unsigned char | reverse_byte_CUDA (unsigned char a) |

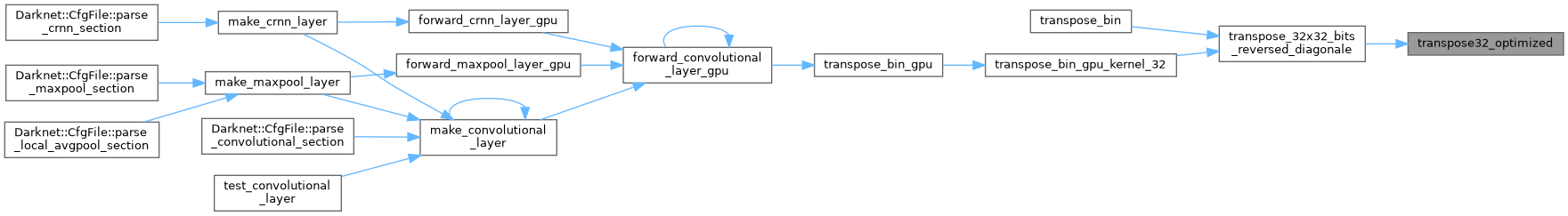

| __device__ void | transpose32_optimized (uint32_t A[32]) |

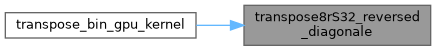

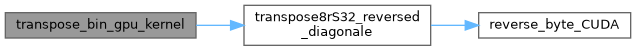

| __device__ void | transpose8rS32_reversed_diagonale (unsigned char *A, unsigned char *B, int m, int n) |

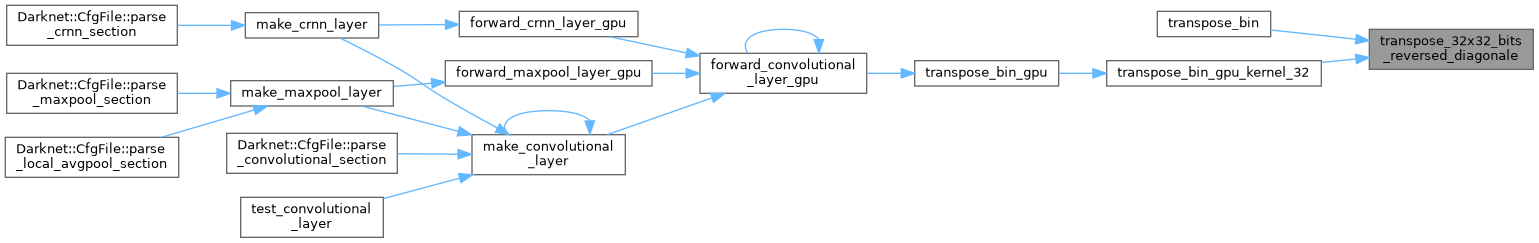

| __device__ void | transpose_32x32_bits_reversed_diagonale (uint32_t *A, uint32_t *B, int m, int n) |

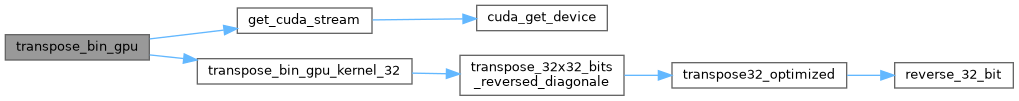

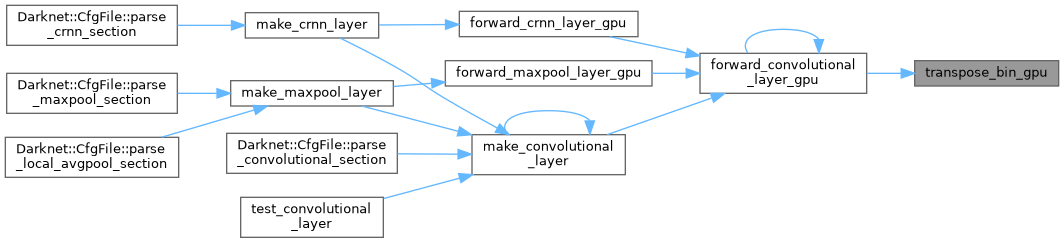

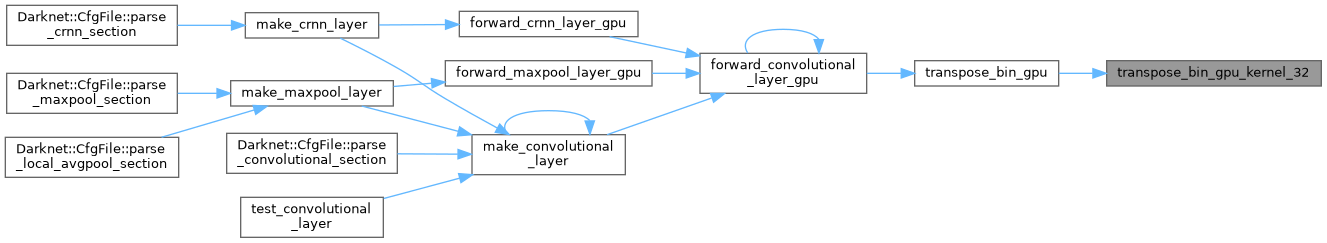

| void | transpose_bin_gpu (unsigned char *A, unsigned char *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

| __global__ void | transpose_bin_gpu_kernel (unsigned char *A, unsigned char *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

| __global__ void | transpose_bin_gpu_kernel_32 (uint32_t *A, uint32_t *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

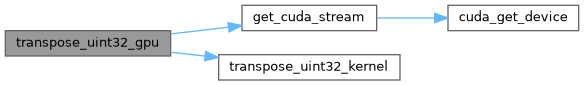

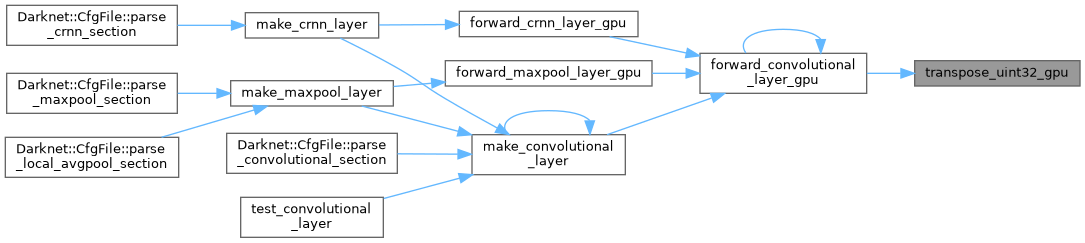

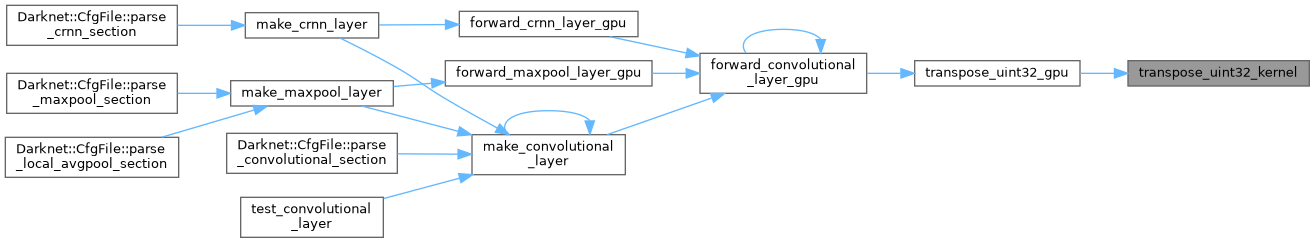

| void | transpose_uint32_gpu (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

| __global__ void | transpose_uint32_kernel (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

| __global__ void | transpose_uint32_kernel_2 (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

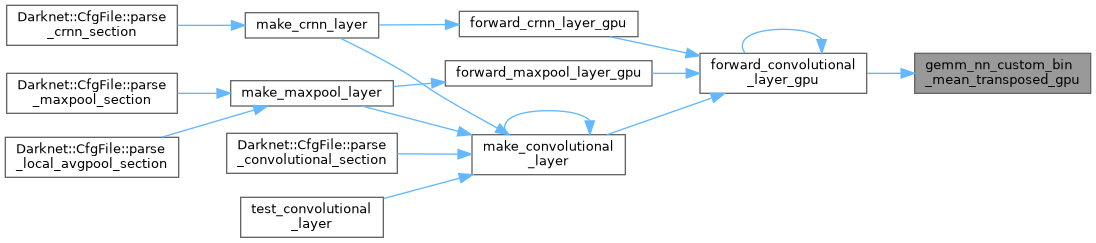

| __inline__ __device__ int | warpAllReduceSum (int val) |

| __device__ static __host__ uint8_t | xnor_bit1 (uint8_t a, uint8_t b) |

| __device__ static __host__ ulonglong4 | xor_int256 (ulonglong4 a, ulonglong4 b) |

| __device__ static __host__ uint32_t | xor_int32 (uint32_t a, uint32_t b) |

| __device__ static __host__ uint64_t | xor_int64 (uint64_t a, uint64_t b) |

Variables

| const int | CAFFE_CUDA_NUM_THREADS = 512 |

| #define CUDA_KERNEL_LOOP | (i, n) |
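This page lists `CUDA_KERNEL_LOOP(i, n)` without its body. Assuming it keeps the Caffe definition that the `CAFFE_*` names in this file point to, it expands to a grid-stride loop: `for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < (n); i += blockDim.x * gridDim.x)`. The host-side C++ sketch below (hypothetical helper `simulate_kernel_loop`, not part of this file) replays that loop for every thread of a launch and counts how often each index is visited; every index is hit exactly once whether the grid has fewer or more threads than `n`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical host-side model of CUDA_KERNEL_LOOP(i, n), ASSUMING the Caffe
// definition: each thread starts at its global index and advances by the total
// number of threads in the grid until it reaches n.
std::vector<int> simulate_kernel_loop(int n, int grid_dim, int block_dim) {
    assert(n >= 0 && grid_dim > 0 && block_dim > 0);
    std::vector<int> visits(n, 0);
    for (int block = 0; block < grid_dim; ++block) {          // blockIdx.x
        for (int thread = 0; thread < block_dim; ++thread) {   // threadIdx.x
            for (int i = block * block_dim + thread; i < n; i += block_dim * grid_dim) {
                ++visits[i];  // the loop body would process element i here
            }
        }
    }
    return visits;
}
```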

| #define swap | (a0, a1, j, m) | t = (a0 ^ (a1 >>j)) & m; a0 = a0 ^ t; a1 = a1 ^ (t << j); |
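The `swap` macro is a delta swap: it exchanges the bits of `a0` selected by the mask `m` with the bits of `a1` selected by `m << j`, using a scratch variable `t` that must already be declared in the caller's scope. The sketch below copies the macro body but renames it `DELTA_SWAP` (a choice made here so it cannot collide with `std::swap`) and wraps it in a hypothetical `delta_swap` function. With `j = 16` and `m = 0x0000FFFF`, the low half of `a0` and the high half of `a1` trade places.

```cpp
#include <cassert>
#include <cstdint>

// Same body as this file's `swap` macro, renamed to avoid clashing with std::swap.
// Requires a variable named t in the enclosing scope.
#define DELTA_SWAP(a0, a1, j, m) t = (a0 ^ (a1 >> j)) & m; a0 = a0 ^ t; a1 = a1 ^ (t << j);

// Hypothetical wrapper: exchanges (a0 & m) with ((a1 >> j) & m).
void delta_swap(uint32_t &a0, uint32_t &a1, int j, uint32_t m) {
    assert(j > 0 && j < 32);
    uint32_t t;  // scratch variable the macro writes to
    DELTA_SWAP(a0, a1, j, m)
}
```

Because the operation is a pure exchange, applying the same swap twice restores both words.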

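| template<typename T> __device__ uint32_t __ballot_custom | (T val) | inline |

| template<typename T1, typename T2> __device__ T1 __shfl_custom | (T1 val, T2 lane) | inline |

| int CAFFE_GET_BLOCKS | (const int N) | inline |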
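With `CAFFE_CUDA_NUM_THREADS = 512` (listed under Variables), `CAFFE_GET_BLOCKS(N)` presumably follows Caffe and returns the number of 512-thread blocks needed to cover `N` elements, i.e. a rounded-up division. The sketch below (hypothetical name `caffe_get_blocks_sketch`) shows that arithmetic.

```cpp
#include <cassert>

const int CAFFE_CUDA_NUM_THREADS = 512;  // value listed in this file

// Sketch of CAFFE_GET_BLOCKS, ASSUMING the Caffe definition: ceil(N / 512).
inline int caffe_get_blocks_sketch(const int N) {
    assert(N >= 0);
    return (N + CAFFE_CUDA_NUM_THREADS - 1) / CAFFE_CUDA_NUM_THREADS;
}
```

A launch would then typically look like `kernel<<<CAFFE_GET_BLOCKS(n), CAFFE_CUDA_NUM_THREADS>>>(...)`, with `CUDA_KERNEL_LOOP` inside the kernel skipping the surplus threads of the last block.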

| void convolve_bin_gpu | (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad, int new_lda, float *mean_arr_gpu) |

| __global__ void convolve_bin_gpu_kernel | (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad, int new_lda, float *mean_arr_gpu) |

| void convolve_gpu | (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad) |

| __global__ void convolve_gpu_kernel | (float *input, float *weights, float *output, int in_w, int in_h, int in_c, int n, int size, int pad) |
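`convolve_gpu` takes no stride argument, which suggests a stride-1 direct convolution. The CPU sketch below (hypothetical `convolve_reference`) is one way to read its parameters, under assumptions this page does not confirm: `input` is laid out `[in_c][in_h][in_w]`, `weights` is `[n][in_c][size][size]`, padding is zero-filled, each filter's output keeps the input's `in_w x in_h` size (which matches a standard convolution only when `size == 2 * pad + 1`), and results are accumulated into `output`. Such a reference is mainly useful for checking a GPU kernel on small inputs.

```cpp
#include <cassert>

// Hypothetical CPU reference for convolve_gpu's arguments. ASSUMED layouts:
//   input [in_c][in_h][in_w], weights [n][in_c][size][size], output [n][in_h][in_w].
// Stride 1, zero padding; sums are accumulated into output (caller zero-fills it).
void convolve_reference(const float *input, const float *weights, float *output,
                        int in_w, int in_h, int in_c, int n, int size, int pad) {
    assert(size > 0 && pad >= 0);
    for (int fil = 0; fil < n; ++fil)
        for (int y = 0; y < in_h; ++y)
            for (int x = 0; x < in_w; ++x) {
                float sum = 0.0f;
                for (int chan = 0; chan < in_c; ++chan)
                    for (int f_y = 0; f_y < size; ++f_y)
                        for (int f_x = 0; f_x < size; ++f_x) {
                            const int iy = y + f_y - pad, ix = x + f_x - pad;
                            if (iy < 0 || ix < 0 || iy >= in_h || ix >= in_w)
                                continue;  // outside the image: zero padding
                            sum += input[(chan * in_h + iy) * in_w + ix] *
                                   weights[((fil * in_c + chan) * size + f_y) * size + f_x];
                        }
                output[(fil * in_h + y) * in_w + x] += sum;
            }
}
```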

| void fill_int8_gpu | (unsigned char *src, unsigned char val, size_t size) |

| __global__ void fill_int8_gpu_kernel | (unsigned char *src, unsigned char val, size_t size) |

| void float_to_bit_gpu | (float *src, unsigned char *dst, size_t size) |

| __global__ void float_to_bit_gpu_kernel | (float *src, unsigned char *dst, size_t size) |
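A host reference for `float_to_bit_gpu`, assuming darknet's CPU packing convention (not shown on this page): element `i` becomes bit `i % 8` of byte `i / 8`, least significant bit first, and the bit is set when `src[i] > 0`. The name `float_to_bit_reference` is hypothetical, and this version expects `dst` to hold `(size + 7) / 8` bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical host reference for float_to_bit_gpu. ASSUMED convention:
// bit (i % 8) of byte (i / 8) is set when src[i] > 0 (LSB first).
void float_to_bit_reference(const float *src, unsigned char *dst, std::size_t size) {
    assert(src != nullptr && dst != nullptr);
    std::memset(dst, 0, (size + 7) / 8);  // clear every output byte first
    for (std::size_t i = 0; i < size; ++i) {
        if (src[i] > 0.0f)
            dst[i / 8] |= static_cast<unsigned char>(1u << (i % 8));
    }
}
```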

| void gemm_nn_custom_bin_mean_transposed_gpu | (int M, int N, int K, unsigned char *A, int lda, unsigned char *B, int ldb, float *C, int ldc, float *mean_arr, float *bias, int leaky_activation, float *shortcut_in_gpu, float *shortcut_out_gpu) |

| __global__ void gemm_nn_custom_bin_mean_transposed_gpu_kernel | (int M, int N, int K, unsigned char *A, int lda, unsigned char *B, int ldb, float *C, int ldc, float *mean_arr, float *bias_arr, int leaky_activation, float *shortcut_in_gpu, float *shortcut_out_gpu) |
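The name `gemm_nn_custom_bin_mean_transposed_gpu` suggests an XNOR-Net style binary GEMM: rows of `A` (binarized weights) and rows of the already transposed `B` (binarized inputs) are bit-packed vectors of +1/-1 values, their dot product is obtained with XNOR and popcount, and the result is scaled by a per-row `mean_arr[i]`. For two such vectors of length `K`, matches minus mismatches equals `2 * matches - K`. The sketch below assumes that reading; it packs each row into `words` 32-bit words (a hypothetical layout, not the `lda`/`ldb` strides of the real function) and leaves out the `bias`, `leaky_activation` and shortcut arguments.

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// Hypothetical reference for the core of the binary GEMM, ASSUMING XNOR-Net semantics:
//   C[i*ldc + j] = (2 * matches(A row i, Bt row j) - K) * mean_arr[i]
// Each row occupies `words` uint32_t; bits beyond K are ignored.
void bin_gemm_mean_reference(int M, int N, int K, const uint32_t *A, const uint32_t *Bt,
                             int words, float *C, int ldc, const float *mean_arr) {
    assert(K >= 0 && K <= 32 * words);
    for (int i = 0; i < M; ++i)
        for (int j = 0; j < N; ++j) {
            int matches = 0;
            for (int w = 0; w < words; ++w) {
                const int valid = K - 32 * w;  // bits of this word that lie inside K
                if (valid <= 0) break;
                const uint32_t mask = valid >= 32 ? 0xFFFFFFFFu : ((1u << valid) - 1u);
                const uint32_t xnor = ~(A[i * words + w] ^ Bt[j * words + w]) & mask;
                matches += static_cast<int>(std::bitset<32>(xnor).count());
            }
            C[i * ldc + j] = (2 * matches - K) * mean_arr[i];
        }
}
```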

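| __device__ static __host__ unsigned char get_bit | (unsigned char const *const src, size_t index) | inline static |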
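A sketch of `get_bit` (hypothetical name `get_bit_sketch`), assuming the same least-significant-bit-first packing that darknet's `float_to_bit` uses: it returns bit `index % 8` of byte `index / 8` as 0 or 1.

```cpp
#include <cassert>
#include <cstddef>

// Sketch of get_bit, ASSUMING LSB-first packing: bit (index % 8) of byte (index / 8).
static inline unsigned char get_bit_sketch(unsigned char const *const src, std::size_t index) {
    assert(src != nullptr);
    return static_cast<unsigned char>((src[index / 8] >> (index % 8)) & 1u);
}
```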

| __global__ void im2col_align_bin_gpu_kernel | (const int n, const float *data_im, const int height, const int width, const int ksize, const int channels, const int pad, const int stride, const int height_col, const int width_col, float *data_col, const int bit_align) |

| void im2col_align_bin_ongpu | (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col, int bit_align) |

| __global__ void im2col_align_gpu_kernel | (const int n, const float *data_im, const int height, const int width, const int ksize, const int pad, const int stride, const int height_col, const int width_col, float *data_col, const int bit_align) |

| void im2col_align_ongpu | (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col, int bit_align) |

| void im2col_gpu_ext | (const float *data_im, const int channels, const int height, const int width, const int kernel_h, const int kernel_w, const int pad_h, const int pad_w, const int stride_h, const int stride_w, const int dilation_h, const int dilation_w, float *data_col) |

| __global__ void im2col_gpu_kernel | (const int n, const float *data_im, const int height, const int width, const int ksize, const int pad, const int stride, const int height_col, const int width_col, float *data_col) |

| __global__ void im2col_gpu_kernel_ext | (const int n, const float *data_im, const int height, const int width, const int kernel_h, const int kernel_w, const int pad_h, const int pad_w, const int stride_h, const int stride_w, const int dilation_h, const int dilation_w, const int height_col, const int width_col, float *data_col) |

| void im2col_ongpu | (float *im, int channels, int height, int width, int ksize, int stride, int pad, float *data_col) |
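`im2col_ongpu` unrolls every `ksize x ksize` patch of the image into a column so that the convolution becomes a single matrix product. The CPU sketch below (hypothetical `im2col_reference`) assumes the usual darknet/Caffe layout: `im` is `[channels][height][width]`, the output height is `height_col = (height + 2 * pad - ksize) / stride + 1` (likewise for the width), and `data_col` has `channels * ksize * ksize` rows of `height_col * width_col` entries, with zeros wherever a patch overlaps the padding.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference for im2col_ongpu's arguments, ASSUMING the darknet/Caffe
// layout: im is [channels][height][width]; data_col is
// [channels * ksize * ksize][height_col * width_col].
std::vector<float> im2col_reference(const float *im, int channels, int height, int width,
                                    int ksize, int stride, int pad) {
    assert(ksize > 0 && stride > 0 && pad >= 0);
    const int height_col = (height + 2 * pad - ksize) / stride + 1;
    const int width_col = (width + 2 * pad - ksize) / stride + 1;
    const int channels_col = channels * ksize * ksize;
    std::vector<float> data_col(static_cast<std::size_t>(channels_col) * height_col * width_col, 0.0f);
    for (int c = 0; c < channels_col; ++c) {
        const int w_offset = c % ksize;            // column inside the kernel window
        const int h_offset = (c / ksize) % ksize;  // row inside the kernel window
        const int c_im = c / ksize / ksize;        // source image channel
        for (int h = 0; h < height_col; ++h)
            for (int w = 0; w < width_col; ++w) {
                const int row = h_offset + h * stride - pad;
                const int col = w_offset + w * stride - pad;
                if (row < 0 || col < 0 || row >= height || col >= width)
                    continue;  // padding region stays zero
                data_col[(c * height_col + h) * width_col + w] =
                    im[(c_im * height + row) * width + col];
            }
    }
    return data_col;
}
```

Under this layout, multiplying an `n x (channels * ksize * ksize)` weight matrix by `data_col` would yield the `n` output feature maps.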

| void repack_input_gpu | (float *input, float *re_packed_input, int w, int h, int c) |

| void repack_input_gpu_bin | (float *input, uint32_t *re_packed_input_bin, int w, int h, int c) |

| __global__ void repack_input_kernel | (float *input, float *re_packed_input, int w, int h, int c) |

| __global__ void repack_input_kernel_2 | (float *input, float *re_packed_input, int w, int h, int c) |

| __global__ void repack_input_kernel_bin | (float *input, uint32_t *re_packed_input_bin, int w, int h, int c) |

| __device__ uint32_t reverse_32_bit | (uint32_t a) |

| __device__ __host__ uint8_t reverse_8_bit | (uint8_t a) |

| __device__ __host__ unsigned char reverse_byte | (unsigned char a) |

| __device__ __host__ unsigned char reverse_byte_2 | (unsigned char a) |

| __device__ unsigned char reverse_byte_CUDA | (unsigned char a) |
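The bit-reversal helpers above are listed only by signature. The portable sketch below reverses a byte or a 32-bit word by swapping progressively smaller groups (halves, then pairs, then single bits); it is one common technique, not necessarily the one `reverse_8_bit` or `reverse_32_bit` use, and both function names are hypothetical. On the device, CUDA also provides the `__brev` intrinsic for reversing a 32-bit word.

```cpp
#include <cstdint>

// Reverse the 8 bits of a: swap nibbles, then bit pairs, then adjacent bits.
inline uint8_t reverse_8_bit_sketch(uint8_t a) {
    a = static_cast<uint8_t>((a >> 4) | (a << 4));
    a = static_cast<uint8_t>(((a & 0xCC) >> 2) | ((a & 0x33) << 2));
    a = static_cast<uint8_t>(((a & 0xAA) >> 1) | ((a & 0x55) << 1));
    return a;
}

// Same idea for 32 bits: swap 16-, 8-, 4-, 2- and 1-bit groups.
inline uint32_t reverse_32_bit_sketch(uint32_t a) {
    a = (a >> 16) | (a << 16);
    a = ((a & 0xFF00FF00u) >> 8) | ((a & 0x00FF00FFu) << 8);
    a = ((a & 0xF0F0F0F0u) >> 4) | ((a & 0x0F0F0F0Fu) << 4);
    a = ((a & 0xCCCCCCCCu) >> 2) | ((a & 0x33333333u) << 2);
    a = ((a & 0xAAAAAAAAu) >> 1) | ((a & 0x55555555u) << 1);
    return a;
}
```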

| __device__ void transpose32_optimized | (uint32_t A[32]) |
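Judging by its name and by the `swap` macro defined in this file, `transpose32_optimized` most likely transposes a 32x32 bit matrix held in 32 words. The sketch below is the textbook delta-swap transpose described in Hacker's Delight: it exchanges the off-diagonal 16x16 blocks, then 8x8 blocks, and so on down to single bits. It treats bit 31 of each word as column 0; the file's own bit order may differ, and `transpose32_sketch` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Delta swap with the same body as this file's `swap` macro (renamed to avoid std::swap).
#define DELTA_SWAP(a0, a1, j, m) t = (a0 ^ (a1 >> j)) & m; a0 = a0 ^ t; a1 = a1 ^ (t << j);

// In-place transpose of a 32x32 bit matrix: row r is A[r], column c is bit (31 - c).
void transpose32_sketch(uint32_t A[32]) {
    assert(A != nullptr);
    uint32_t m = 0x0000FFFFu, t;
    // Block sizes j = 16, 8, 4, 2, 1 with masks 0x0000FFFF, 0x00FF00FF, ..., 0x55555555.
    for (int j = 16; j != 0; j >>= 1, m ^= (m << j)) {
        // Visit every row k whose bit j is clear and pair it with row k + j.
        for (int k = 0; k < 32; k = (k + j + 1) & ~j) {
            DELTA_SWAP(A[k], A[k + j], j, m)
        }
    }
}
```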

| __device__ void transpose8rS32_reversed_diagonale | (unsigned char *A, unsigned char *B, int m, int n) |

| __device__ void transpose_32x32_bits_reversed_diagonale | (uint32_t *A, uint32_t *B, int m, int n) |

| void transpose_bin_gpu | (unsigned char *A, unsigned char *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

| __global__ void transpose_bin_gpu_kernel | (unsigned char *A, unsigned char *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

| __global__ void transpose_bin_gpu_kernel_32 | (uint32_t *A, uint32_t *B, const int n, const int m, const int lda, const int ldb, const int block_size) |

| void transpose_uint32_gpu | (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

| __global__ void transpose_uint32_kernel | (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

| __global__ void transpose_uint32_kernel_2 | (uint32_t *src, uint32_t *dst, int src_h, int src_w, int src_align, int dst_align) |

| __inline__ __device__ int warpAllReduceSum | (int val) |
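`warpAllReduceSum` presumably leaves the sum of `val` over all 32 lanes in every lane of the warp. The host-side model below assumes the common XOR-butterfly pattern, `for (int mask = 16; mask > 0; mask >>= 1) val += __shfl_xor_sync(0xffffffff, val, mask);`, and replays it on 32 simulated lanes: after five exchange steps every lane holds the full sum. The function name `warp_all_reduce_sum_model` is hypothetical.

```cpp
#include <cassert>
#include <vector>

// Host model of an XOR-butterfly warp all-reduce (ASSUMED pattern, see lead-in).
// At each step, lane i adds the value that lane (i ^ mask) held before the step.
std::vector<int> warp_all_reduce_sum_model(std::vector<int> vals) {
    assert(vals.size() == 32);  // one value per lane of a 32-thread warp
    for (int mask = 16; mask > 0; mask >>= 1) {
        const std::vector<int> prev = vals;  // shuffles read the pre-step values
        for (int lane = 0; lane < 32; ++lane)
            vals[lane] = prev[lane] + prev[lane ^ mask];
    }
    return vals;
}
```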

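| __device__ static __host__ uint8_t xnor_bit1 | (uint8_t a, uint8_t b) | inline static |

| __device__ static __host__ ulonglong4 xor_int256 | (ulonglong4 a, ulonglong4 b) | inline static |

| __device__ static __host__ uint32_t xor_int32 | (uint32_t a, uint32_t b) | inline static |

| __device__ static __host__ uint64_t xor_int64 | (uint64_t a, uint64_t b) | inline static |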
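`xor_int256` operates on CUDA's `ulonglong4` vector type (four 64-bit components `x`, `y`, `z`, `w`). A plausible reading, sketched below on the host with a stand-in struct, is a component-wise XOR of two 256-bit words; counting the set bits of the result gives the number of mismatching positions, which an XNOR/popcount binary layer turns into `256 - mismatches` matches. The struct `ULongLong4` and both functions are hypothetical; on the device the per-component count would usually come from the `__popcll` intrinsic.

```cpp
#include <bitset>

// Host stand-in for CUDA's ulonglong4 (four 64-bit lanes).
struct ULongLong4 { unsigned long long x, y, z, w; };

// Component-wise XOR of two 256-bit values (ASSUMED meaning of xor_int256).
inline ULongLong4 xor_int256_sketch(ULongLong4 a, ULongLong4 b) {
    return {a.x ^ b.x, a.y ^ b.y, a.z ^ b.z, a.w ^ b.w};
}

// Number of set bits across all 256 bits, i.e. the Hamming distance after an XOR.
inline int popcount_256(ULongLong4 v) {
    return static_cast<int>(std::bitset<64>(v.x).count() + std::bitset<64>(v.y).count() +
                            std::bitset<64>(v.z).count() + std::bitset<64>(v.w).count());
}
```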

| const int CAFFE_CUDA_NUM_THREADS = 512 |