Functions | |

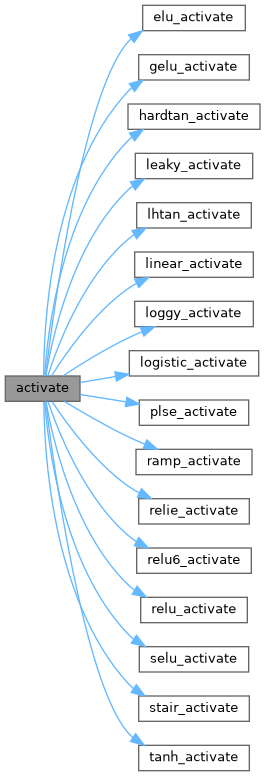

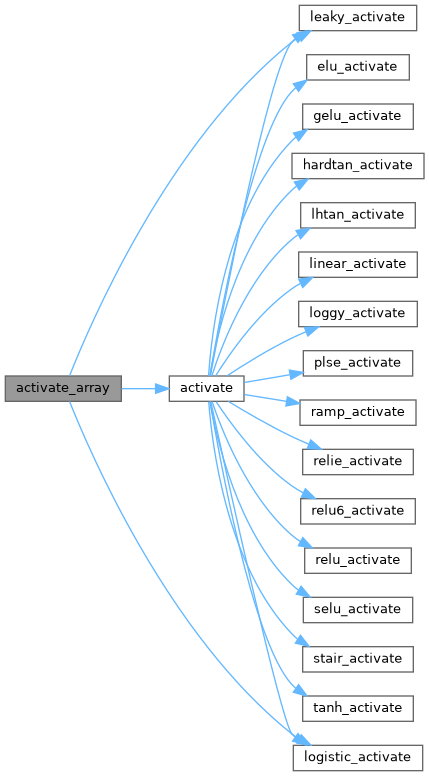

| float | activate (float x, ACTIVATION a) |

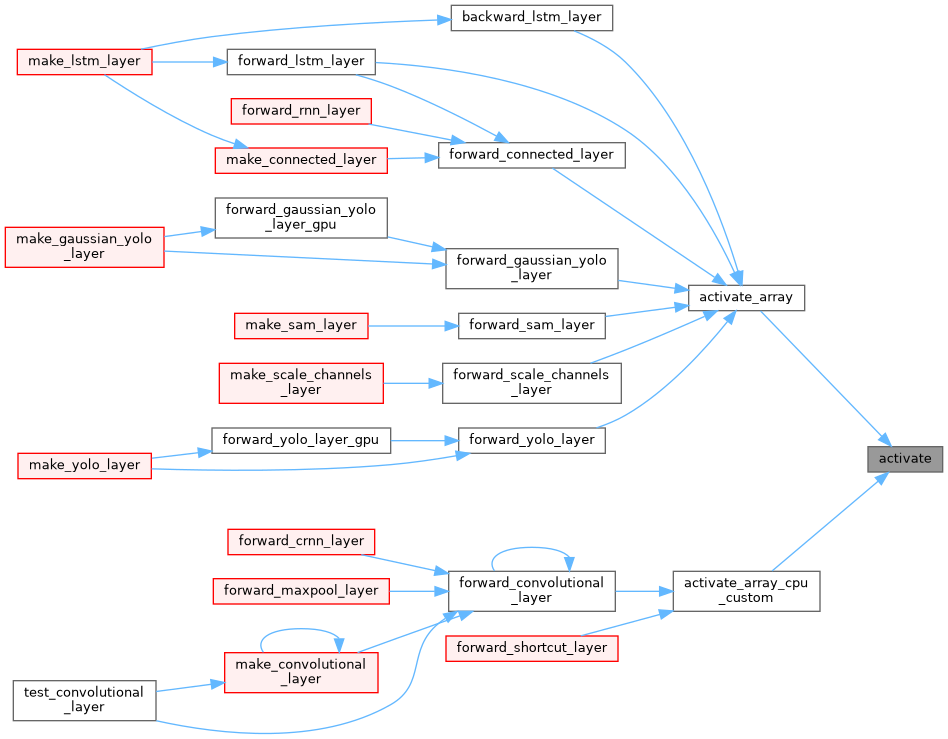

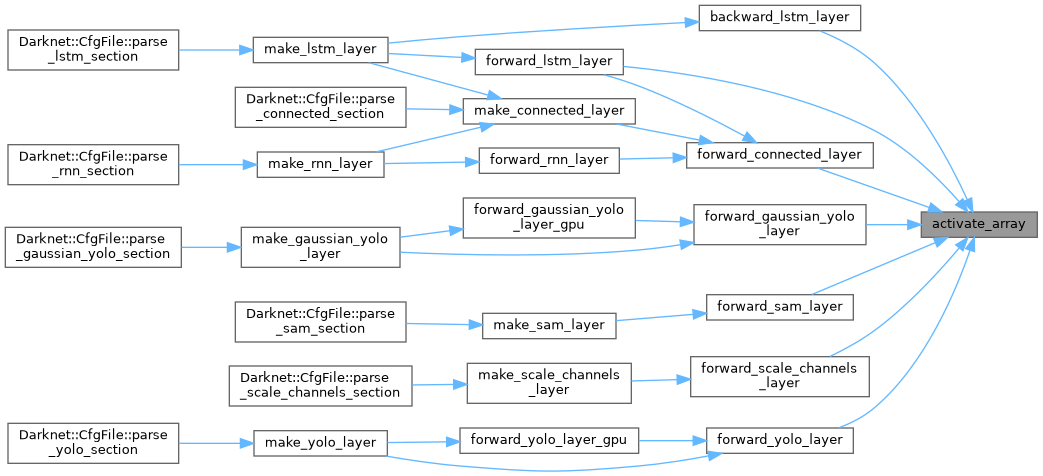

| void | activate_array (float *x, const int n, const ACTIVATION a) |

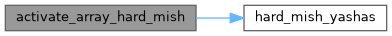

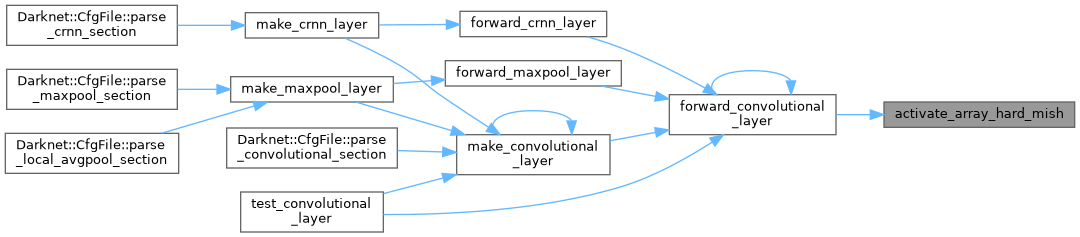

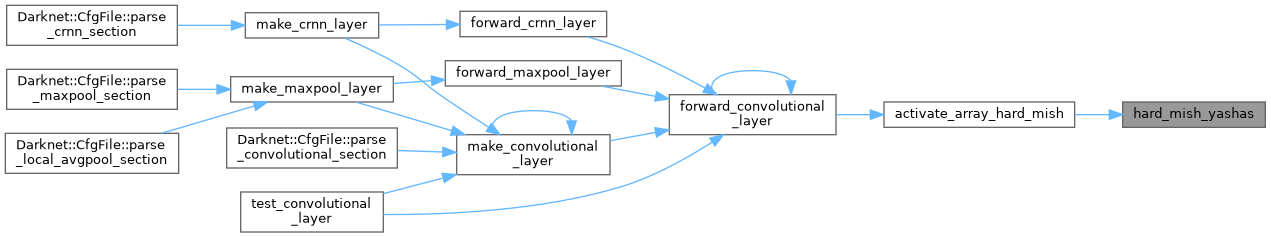

| void | activate_array_hard_mish (float *x, const int n, float *activation_input, float *output) |

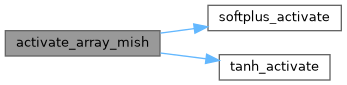

| void | activate_array_mish (float *x, const int n, float *activation_input, float *output) |

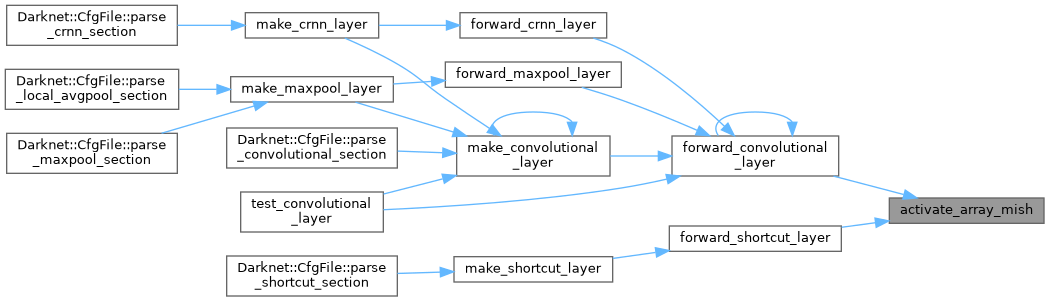

| void | activate_array_normalize_channels (float *x, const int n, int batch, int channels, int wh_step, float *output) |

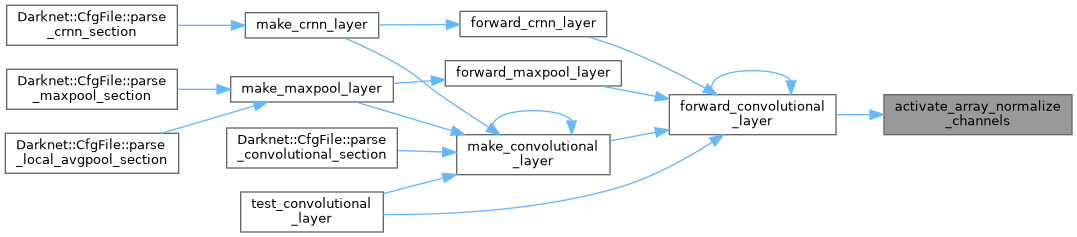

| void | activate_array_normalize_channels_softmax (float *x, const int n, int batch, int channels, int wh_step, float *output, int use_max_val) |

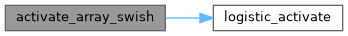

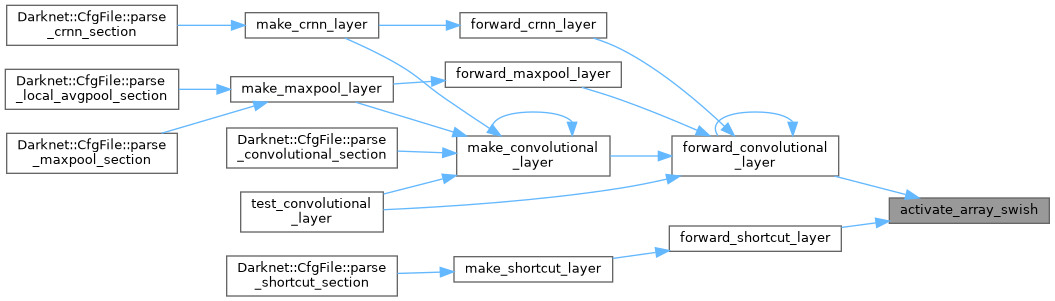

| void | activate_array_swish (float *x, const int n, float *output_sigmoid, float *output) |

| ACTIVATION | get_activation (char *s) |

| const char * | get_activation_string (ACTIVATION a) |

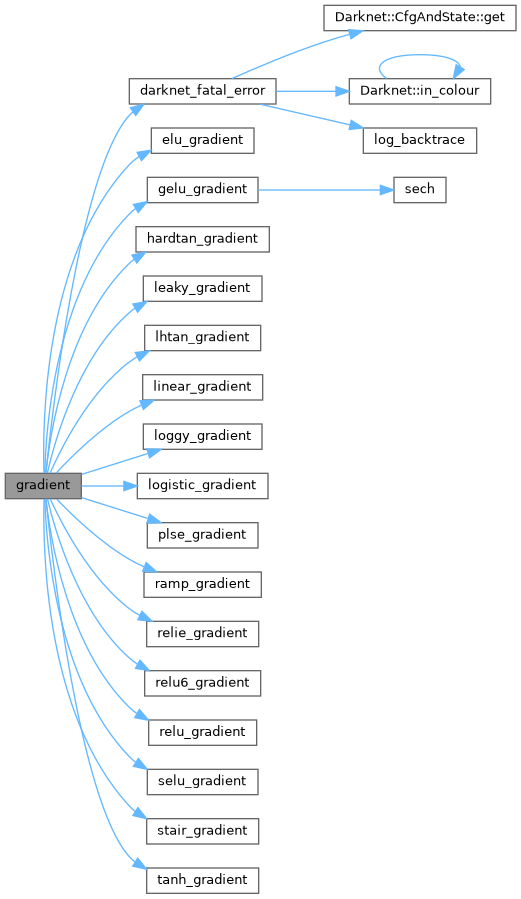

| float | gradient (float x, ACTIVATION a) |

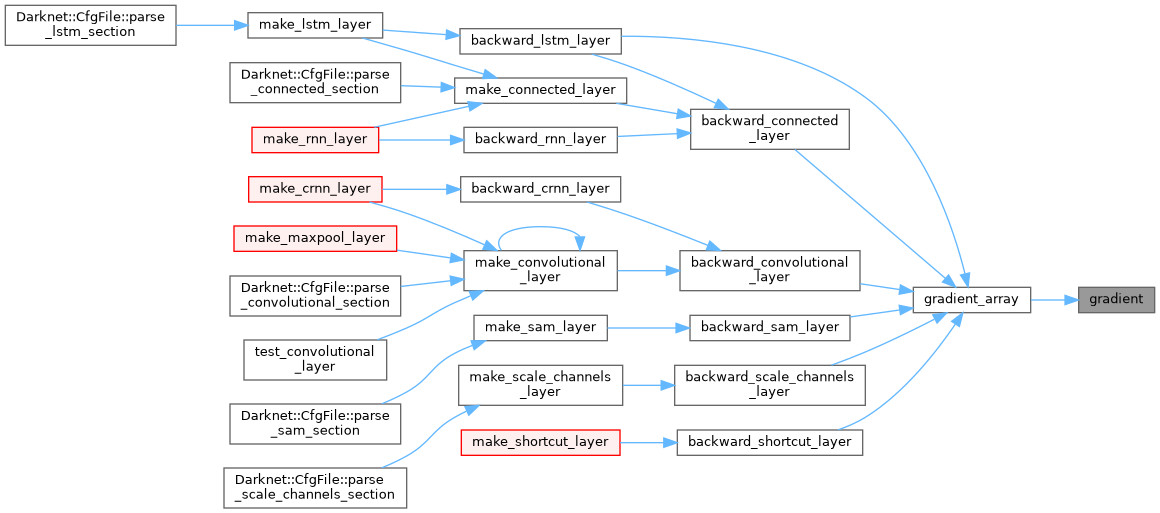

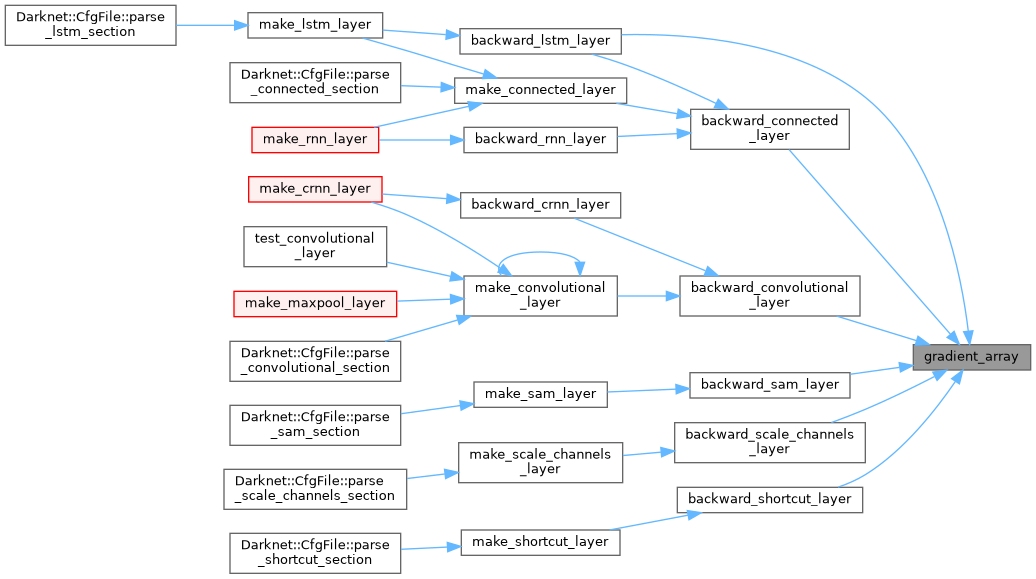

| void | gradient_array (const float *x, const int n, const ACTIVATION a, float *delta) |

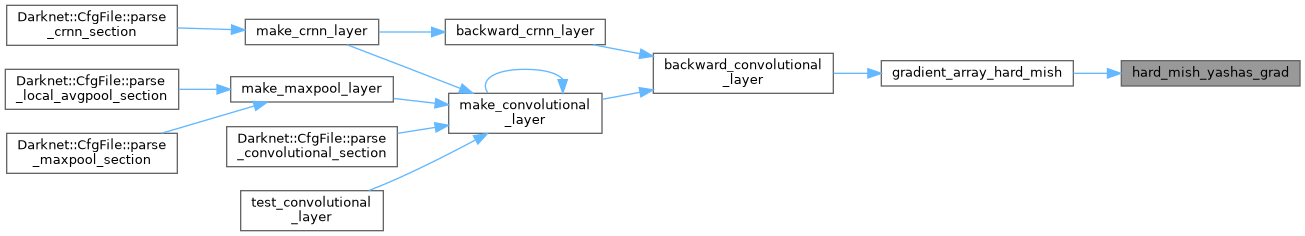

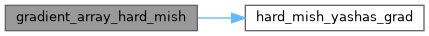

| void | gradient_array_hard_mish (const int n, const float *activation_input, float *delta) |

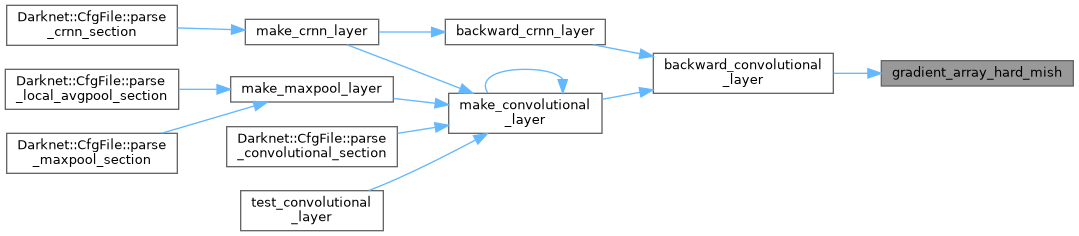

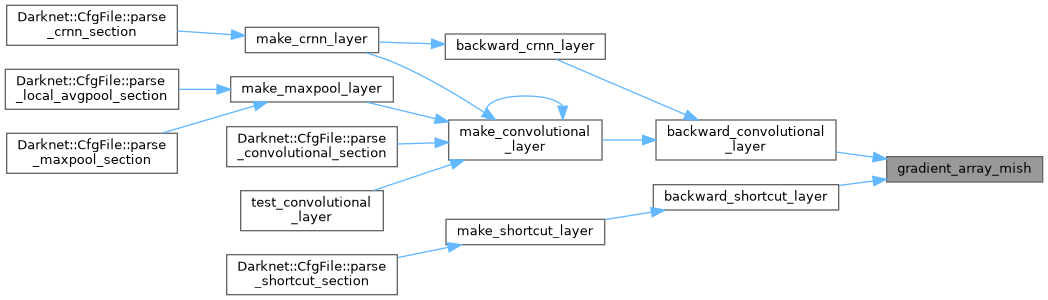

| void | gradient_array_mish (const int n, const float *activation_input, float *delta) |

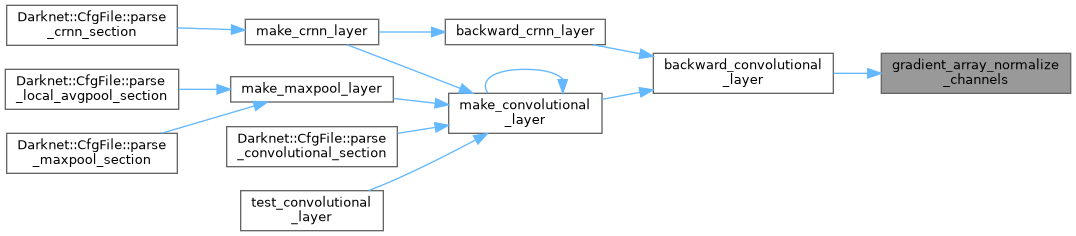

| void | gradient_array_normalize_channels (float *x, const int n, int batch, int channels, int wh_step, float *delta) |

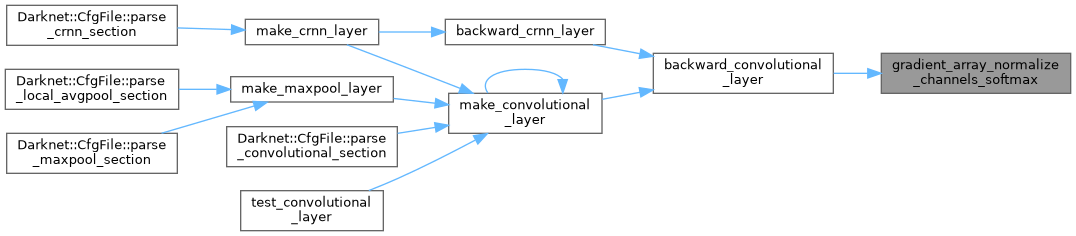

| void | gradient_array_normalize_channels_softmax (float *x, const int n, int batch, int channels, int wh_step, float *delta) |

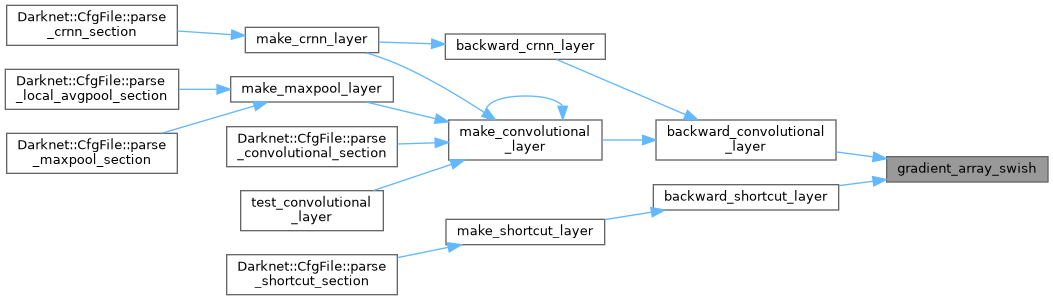

| void | gradient_array_swish (const float *x, const int n, const float *sigmoid, float *delta) |

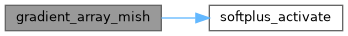

| static float | hard_mish_yashas (float x) |

| static float | hard_mish_yashas_grad (float x) |

`float activate(float x, ACTIVATION a)`

`void activate_array(float *x, const int n, const ACTIVATION a)`
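As a rough illustration of the in-place pattern this signature suggests, the sketch below applies a scalar activation to each of the `n` elements of `x`. The enum `SketchActivation` and the functions `sketch_activate` and `sketch_activate_array` are hypothetical stand-ins that cover only three activations. The 0.1 leaky slope is an assumption, and the real function may differ in details such as loop parallelization.

```c
/* Hypothetical, reduced stand-in for ACTIVATION (three values only). */
typedef enum { SKETCH_LINEAR, SKETCH_RELU, SKETCH_LEAKY } SketchActivation;

/* Scalar activation: dispatch on the enum and return f(x). */
float sketch_activate(float x, SketchActivation a)
{
    switch (a) {
        case SKETCH_LINEAR: return x;
        case SKETCH_RELU:   return x > 0 ? x : 0.0f;
        case SKETCH_LEAKY:  return x > 0 ? x : 0.1f * x; /* assumed slope */
    }
    return x; /* not reached for valid enum values */
}

/* Apply the activation in place to x[0..n-1]. */
void sketch_activate_array(float *x, const int n, const SketchActivation a)
{
    for (int i = 0; i < n; ++i) {
        x[i] = sketch_activate(x[i], a);
    }
}
```

Calling `sketch_activate_array(v, 3, SKETCH_LEAKY)` on `{-2, 0, 3}` overwrites the buffer, scaling the negative entry by the assumed slope and leaving the others unchanged.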

`void activate_array_hard_mish(float *x, const int n, float *activation_input, float *output)`

`void activate_array_mish(float *x, const int n, float *activation_input, float *output)`

`void activate_array_normalize_channels(float *x, const int n, int batch, int channels, int wh_step, float *output)`
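One plausible reading of this signature is a per-position normalization across channels: at each spatial position, positive values are divided by the sum of the positive values over all channels, and non-positive values become zero. The sketch below assumes a channel-major layout in which element `(b, k, wh_i)` sits at index `wh_i + k * wh_step + b * wh_step * channels`. The function name, the layout, and the `eps` constant are all assumptions.

```c
/* Normalize positive values across channels at each spatial position. */
void sketch_normalize_channels(const float *x, const int n, int batch,
                               int channels, int wh_step, float *output)
{
    const float eps = 0.0001f;          /* assumed guard against division by zero */
    const int positions = n / channels; /* batch * wh_step spatial positions */
    (void)batch;                        /* implied by n, channels and wh_step */

    for (int i = 0; i < positions; ++i) {
        const int wh_i = i % wh_step;   /* offset inside one channel plane */
        const int b = i / wh_step;      /* batch index */
        const int base = wh_i + b * wh_step * channels;

        float sum = eps;
        for (int k = 0; k < channels; ++k) {
            const float val = x[base + k * wh_step];
            if (val > 0) sum += val;
        }
        for (int k = 0; k < channels; ++k) {
            const float val = x[base + k * wh_step];
            output[base + k * wh_step] = val > 0 ? val / sum : 0.0f;
        }
    }
}
```

With two channels and two positions, the input `{1, -1, 3, 2}` yields roughly `{0.25, 0, 0.75, 1}`: the first position splits 1 and 3, while the second keeps only the positive value 2.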

`void activate_array_normalize_channels_softmax(float *x, const int n, int batch, int channels, int wh_step, float *output, int use_max_val)`

`void activate_array_swish(float *x, const int n, float *output_sigmoid, float *output)`

`ACTIVATION get_activation(char *s)`

`const char *get_activation_string(ACTIVATION a)`
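These two functions form a name-to-enum and enum-to-name pair. The sketch below shows one way such a mapping can be written. The enum `SketchNamedActivation`, the three names it recognizes, and the fallback to ReLU for unknown strings are assumptions for illustration, not the documented behavior.

```c
#include <string.h>

/* Hypothetical, reduced stand-in for ACTIVATION. */
typedef enum {
    SKETCH_NAMED_RELU,
    SKETCH_NAMED_LEAKY,
    SKETCH_NAMED_LOGISTIC
} SketchNamedActivation;

/* Parse a configuration string into an enum value. */
SketchNamedActivation sketch_get_activation(const char *s)
{
    if (strcmp(s, "relu") == 0)     return SKETCH_NAMED_RELU;
    if (strcmp(s, "leaky") == 0)    return SKETCH_NAMED_LEAKY;
    if (strcmp(s, "logistic") == 0) return SKETCH_NAMED_LOGISTIC;
    return SKETCH_NAMED_RELU; /* assumed fallback for unknown names */
}

/* Map an enum value back to its configuration string. */
const char *sketch_get_activation_string(SketchNamedActivation a)
{
    switch (a) {
        case SKETCH_NAMED_RELU:     return "relu";
        case SKETCH_NAMED_LEAKY:    return "leaky";
        case SKETCH_NAMED_LOGISTIC: return "logistic";
    }
    return "relu"; /* not reached for valid enum values */
}
```

For every recognized name, converting to the enum and back returns the original string, which is a convenient property to check in a unit test.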

`float gradient(float x, ACTIVATION a)`

`void gradient_array(const float *x, const int n, const ACTIVATION a, float *delta)`
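The `delta` parameter suggests the usual chain-rule step in backpropagation: each incoming error term is multiplied by the activation's derivative at the matching element of `x`. The sketch below uses a hypothetical reduced enum `SketchGradActivation` and an assumed leaky slope of 0.1. Whether `x` holds pre- or post-activation values is not stated here; for ReLU and leaky ReLU the sign, and therefore the derivative, is the same either way.

```c
/* Hypothetical, reduced stand-in for ACTIVATION (three values only). */
typedef enum {
    SKETCH_GRAD_LINEAR,
    SKETCH_GRAD_RELU,
    SKETCH_GRAD_LEAKY
} SketchGradActivation;

/* Derivative of the activation, evaluated from x. */
float sketch_gradient(float x, SketchGradActivation a)
{
    switch (a) {
        case SKETCH_GRAD_LINEAR: return 1.0f;
        case SKETCH_GRAD_RELU:   return x > 0 ? 1.0f : 0.0f;
        case SKETCH_GRAD_LEAKY:  return x > 0 ? 1.0f : 0.1f; /* assumed slope */
    }
    return 1.0f; /* not reached for valid enum values */
}

/* Chain rule: scale each error term by the local derivative, in place. */
void sketch_gradient_array(const float *x, const int n,
                           const SketchGradActivation a, float *delta)
{
    for (int i = 0; i < n; ++i) {
        delta[i] *= sketch_gradient(x[i], a);
    }
}
```

Note that `delta` is both input and output: it is scaled in place rather than overwritten.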

`void gradient_array_hard_mish(const int n, const float *activation_input, float *delta)`

`void gradient_array_mish(const int n, const float *activation_input, float *delta)`

`void gradient_array_normalize_channels(float *x, const int n, int batch, int channels, int wh_step, float *delta)`

`void gradient_array_normalize_channels_softmax(float *x, const int n, int batch, int channels, int wh_step, float *delta)`

`void gradient_array_swish(const float *x, const int n, const float *sigmoid, float *delta)`
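For swish `f(z) = z * s(z)` with `s` the sigmoid, the derivative is `s + z * s * (1 - s)`, which can be rewritten as `f + s * (1 - f)`. That form needs only the forward output and the cached sigmoid, which fits this signature. The sketch below assumes that `x` holds the swish output from the forward pass; that interpretation and the function name are assumptions.

```c
/* Swish backward pass: delta *= f + s * (1 - f), with f the forward output. */
void sketch_gradient_array_swish(const float *x, const int n,
                                 const float *sigmoid, float *delta)
{
    for (int i = 0; i < n; ++i) {
        const float swish = x[i];   /* assumed: forward output, not raw input */
        delta[i] *= swish + sigmoid[i] * (1.0f - swish);
    }
}
```

At a raw input of zero, the forward output is 0 and the sigmoid is 0.5, so an incoming `delta` of 2 becomes 1.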

`static float hard_mish_yashas(float x)`

`static float hard_mish_yashas_grad(float x)`
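Only the signatures of `hard_mish_yashas` and `hard_mish_yashas_grad` are listed here. As an illustration of what a "hard" (piecewise-polynomial) Mish approximation and its derivative can look like, the sketch below assumes the form `x` for `x > 0`, `x*x/2 + x` for `-2 < x <= 0`, and `0` otherwise. Both the formula and the `sketch_` names are assumptions and should be checked against the actual source.

```c
/* Assumed piecewise approximation of Mish without exp/tanh calls. */
float sketch_hard_mish(float x)
{
    if (x > 0)  return x;
    if (x > -2) return x * x / 2 + x;
    return 0.0f;
}

/* Derivative of the assumed piecewise form above. */
float sketch_hard_mish_grad(float x)
{
    if (x > 0)  return 1.0f;
    if (x > -2) return x + 1;
    return 0.0f;
}
```

The two outer pieces meet the quadratic continuously at `x = 0` and `x = -2`, where the function value is zero in both cases, and the function reaches its minimum of -0.5 at `x = -1`, where the derivative is zero.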