|

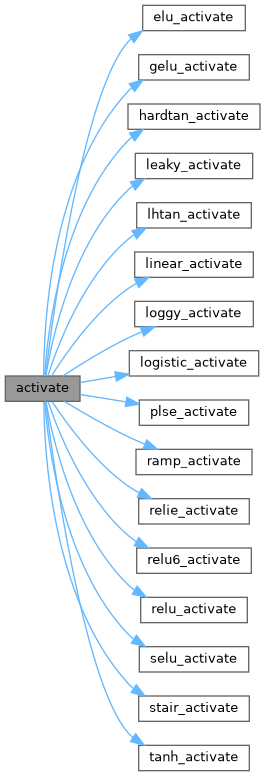

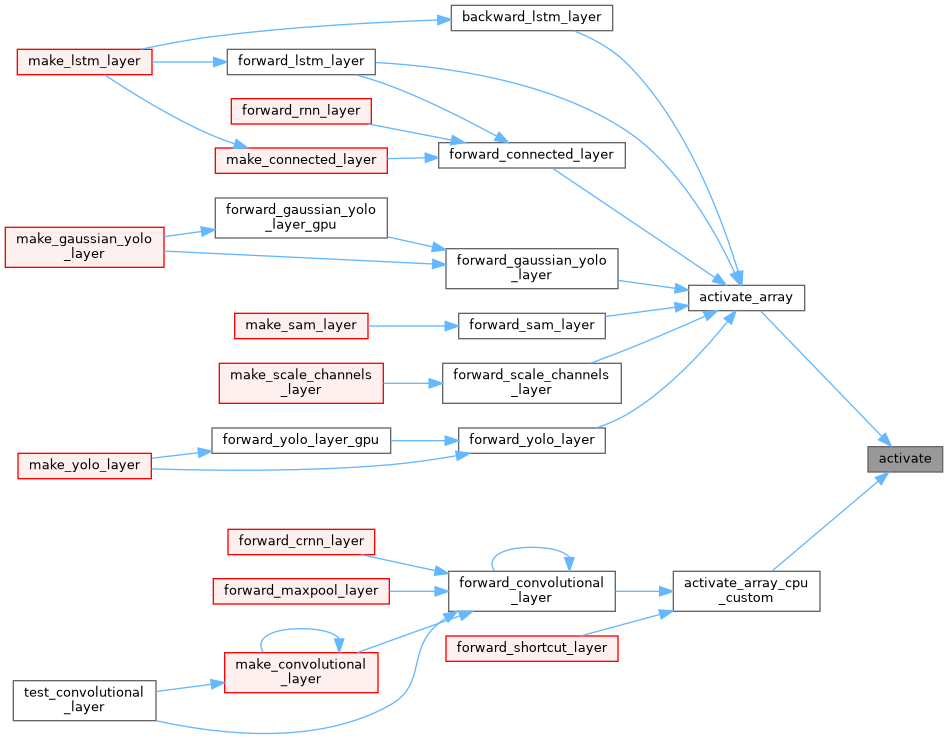

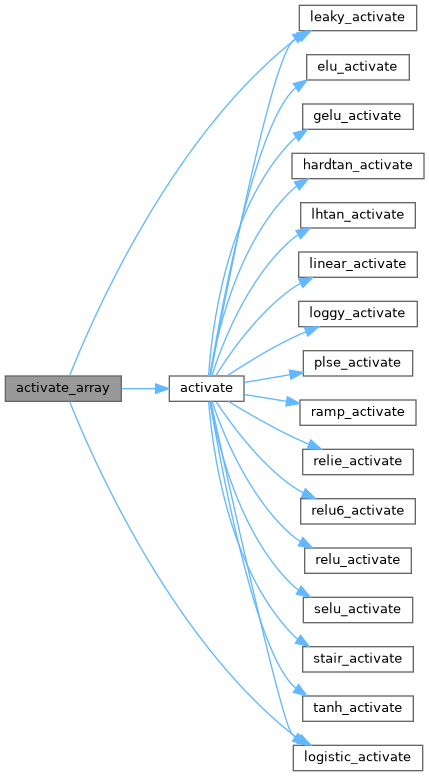

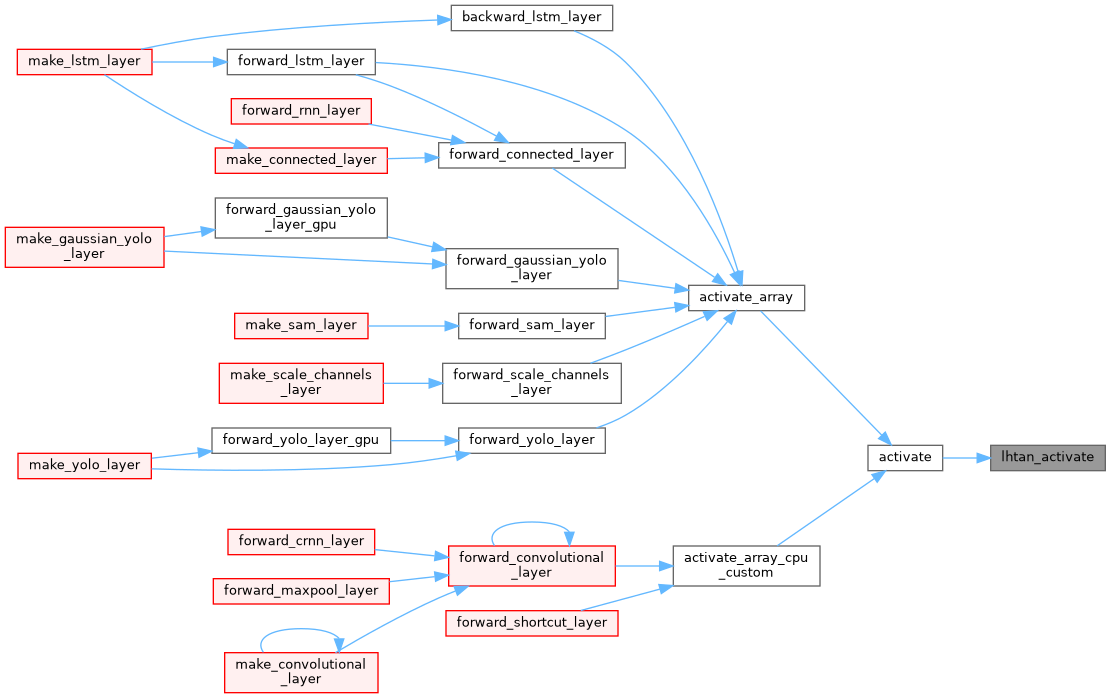

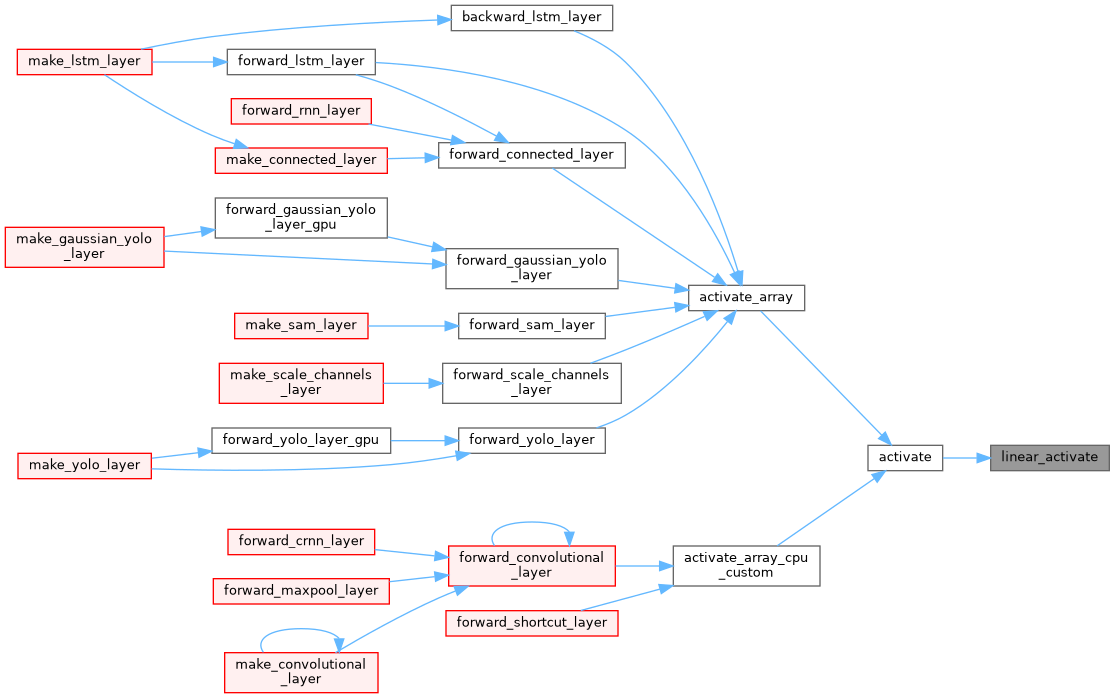

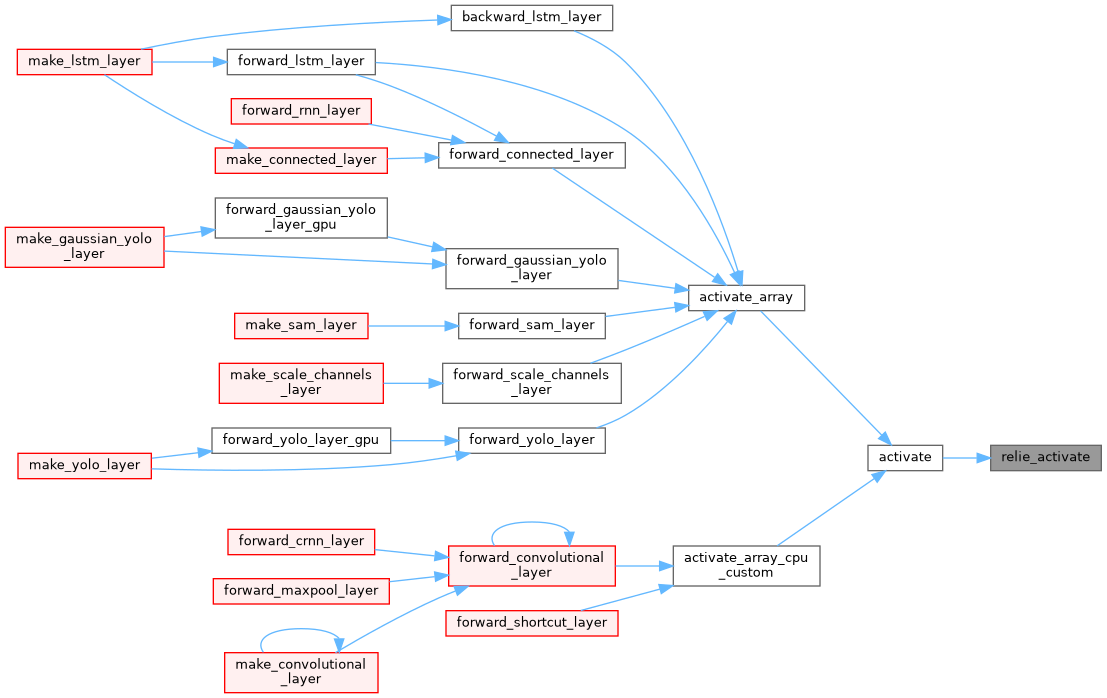

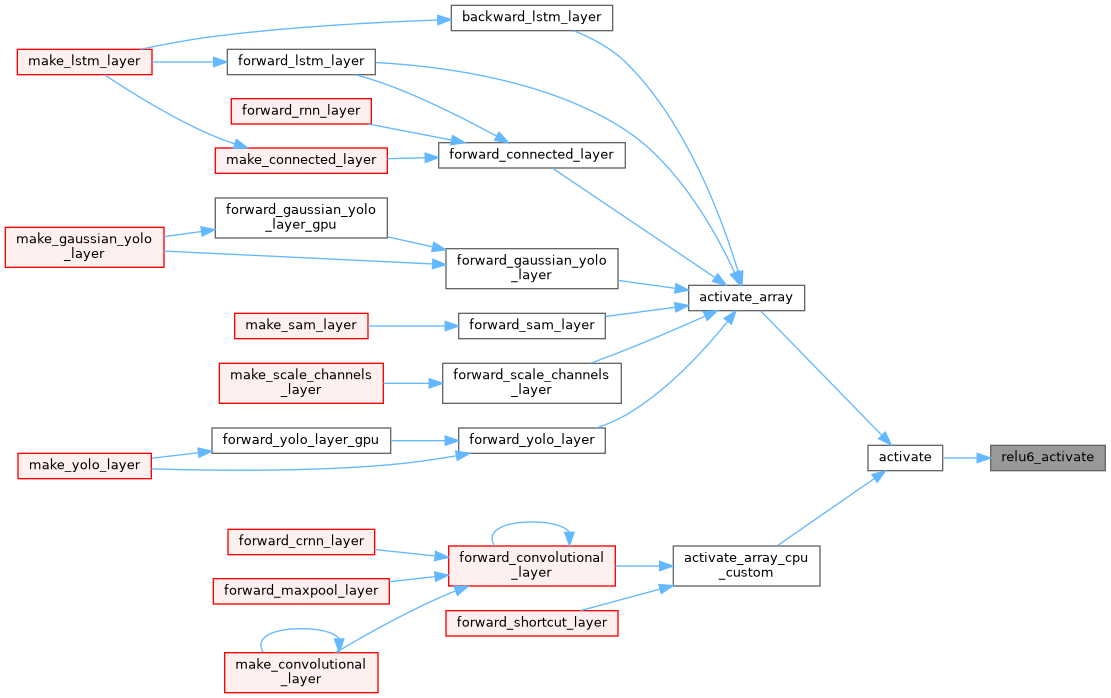

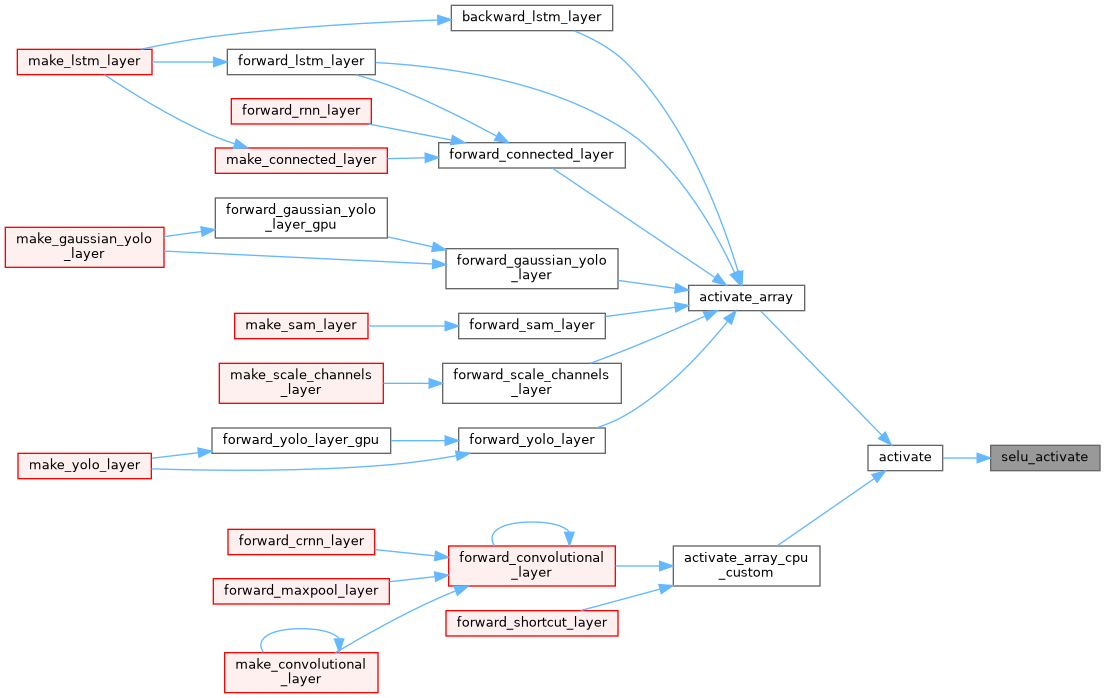

`float activate(float x, ACTIVATION a)`

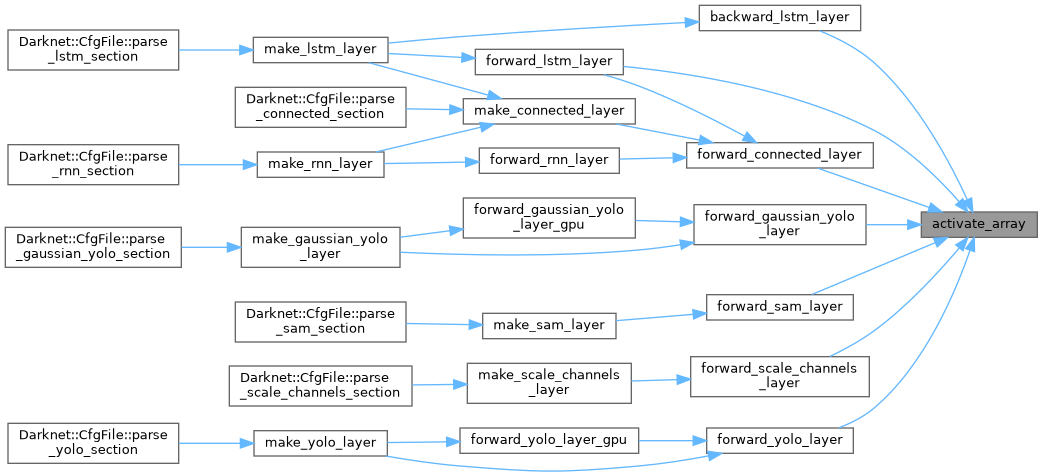

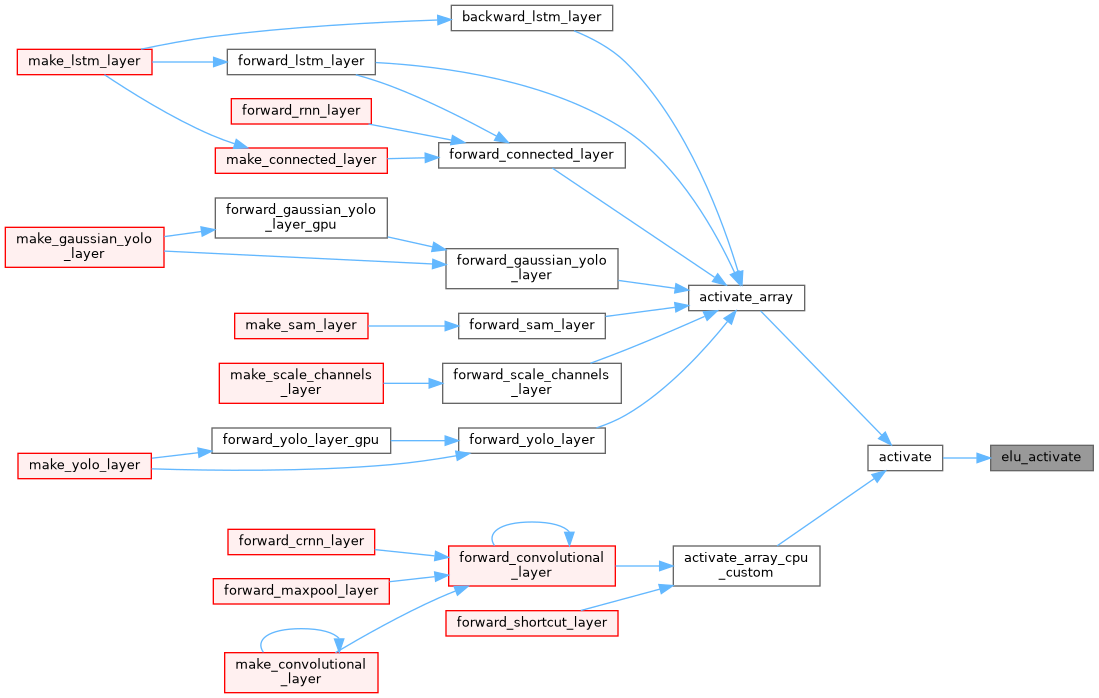

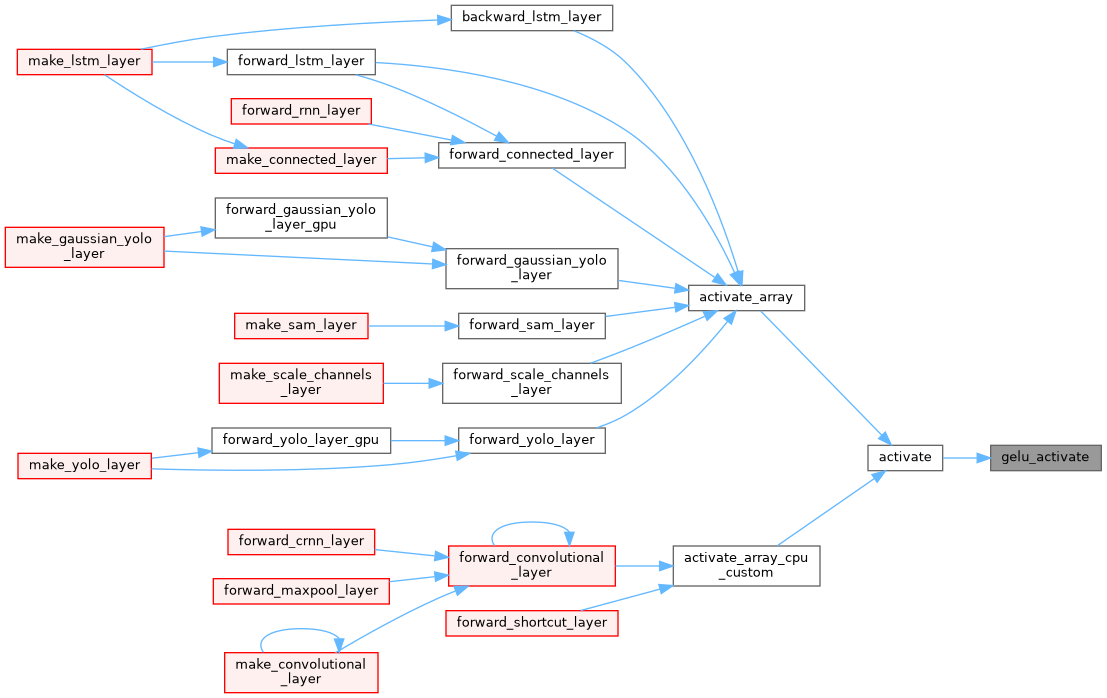

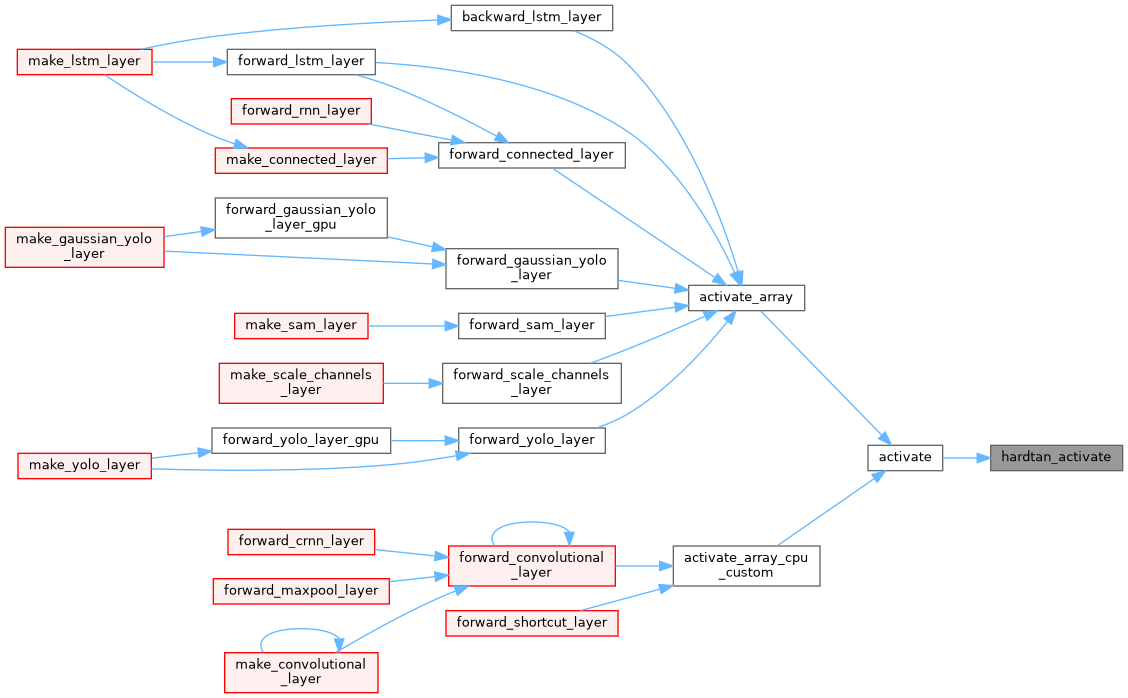

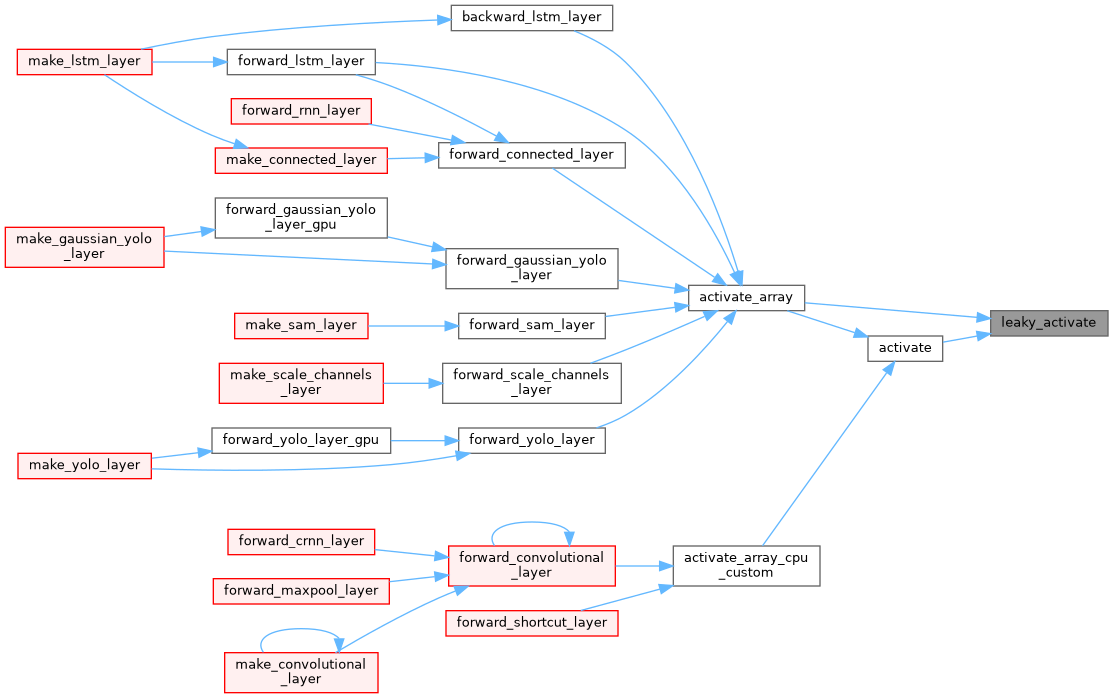

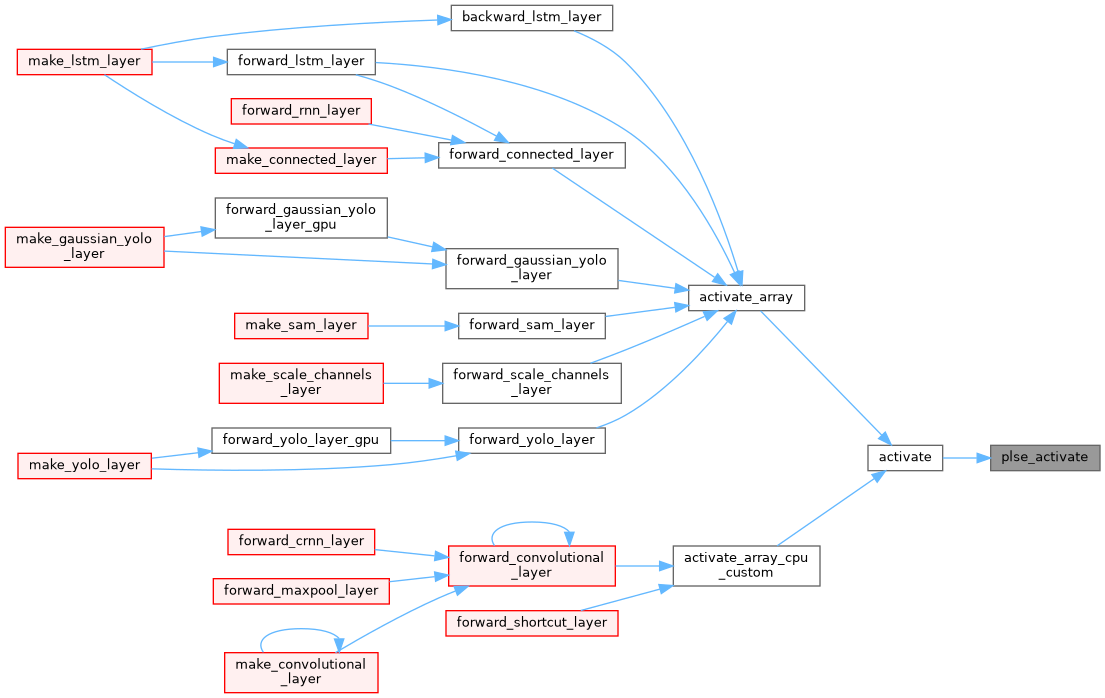

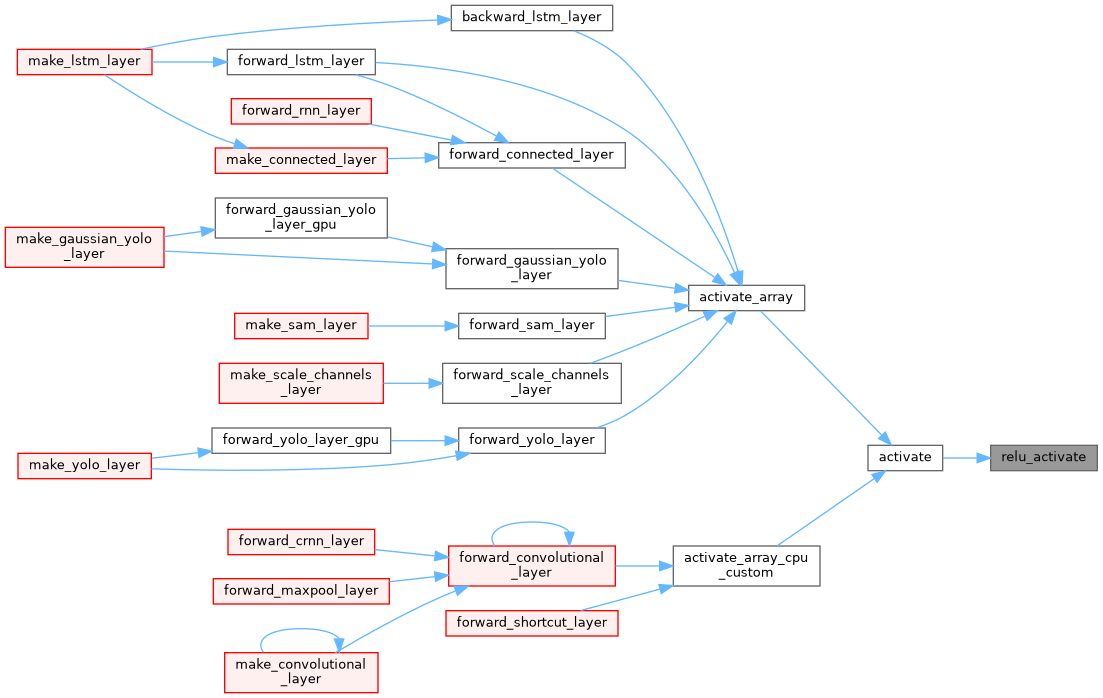

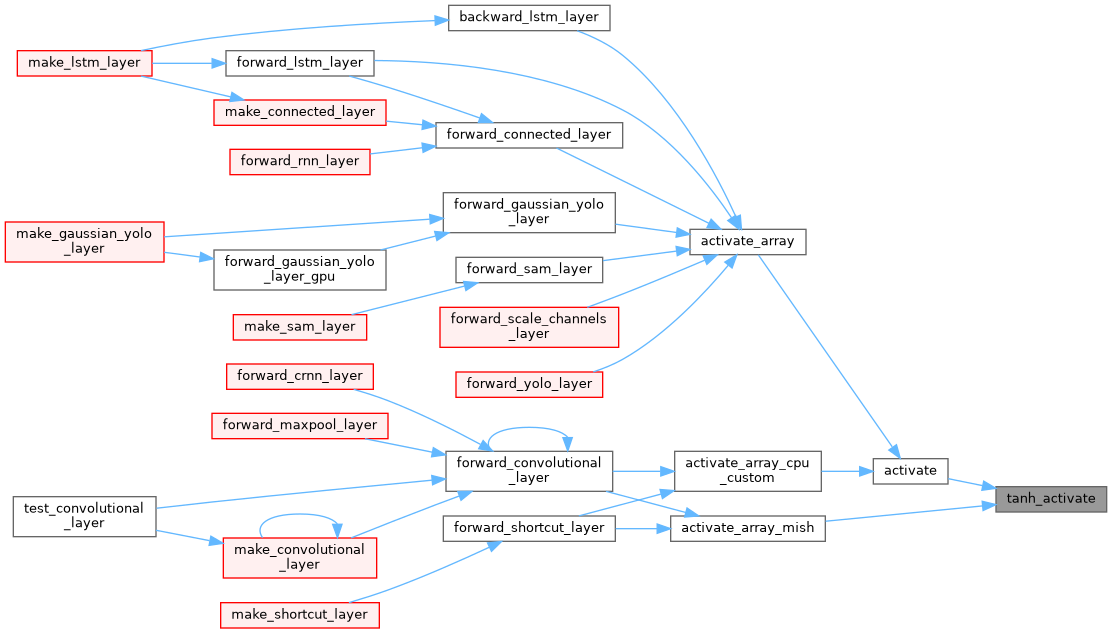

`void activate_array(float *x, const int n, const ACTIVATION a)`

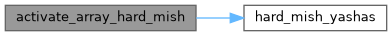

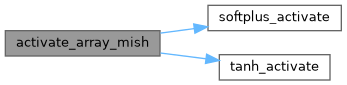

`void activate_array_hard_mish(float *x, const int n, float *activation_input, float *output)`

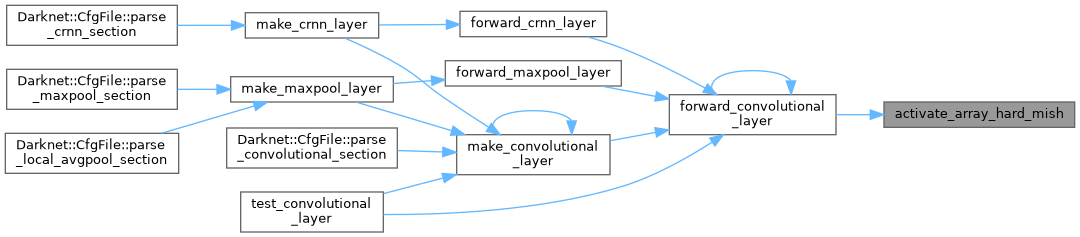

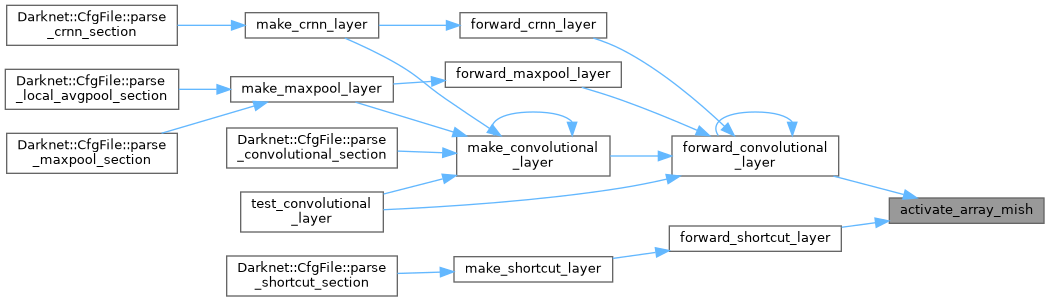

`void activate_array_mish(float *x, const int n, float *activation_input, float *output)`

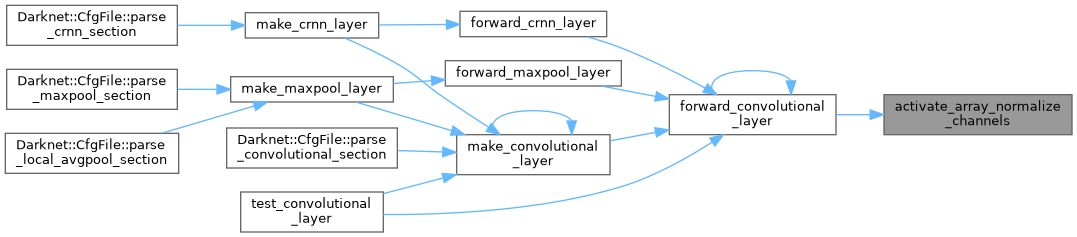

`void activate_array_normalize_channels(float *x, const int n, int batch, int channels, int wh_step, float *output)`
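Judging from its parameters, `activate_array_normalize_channels` rescales values across the channel axis at each spatial position. The sketch below makes these assumptions:

- The memory layout is batch × channels × `wh_step` spatial positions.
- `n` counts all elements.
- Negative values are zeroed.
- At each position, the positive values are divided by their sum plus a small epsilon, so they sum to just under 1.

The epsilon value is also an assumption.

```c
#include <assert.h>

/* Normalize positive activations across channels at each (batch, position). */
void activate_array_normalize_channels_sketch(const float *x, const int n,
                                              int batch, int channels,
                                              int wh_step, float *output)
{
    const float eps = 0.0001f;                 /* assumed stabilizer */
    assert(n == batch * channels * wh_step);   /* assumed layout */
    for (int b = 0; b < batch; ++b) {
        for (int p = 0; p < wh_step; ++p) {
            const int base = b * channels * wh_step + p;
            float sum = eps;
            for (int c = 0; c < channels; ++c) {
                float v = x[base + c * wh_step];
                if (v > 0.0f) sum += v;
            }
            for (int c = 0; c < channels; ++c) {
                float v = x[base + c * wh_step];
                output[base + c * wh_step] = v > 0.0f ? v / sum : 0.0f;
            }
        }
    }
}
```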

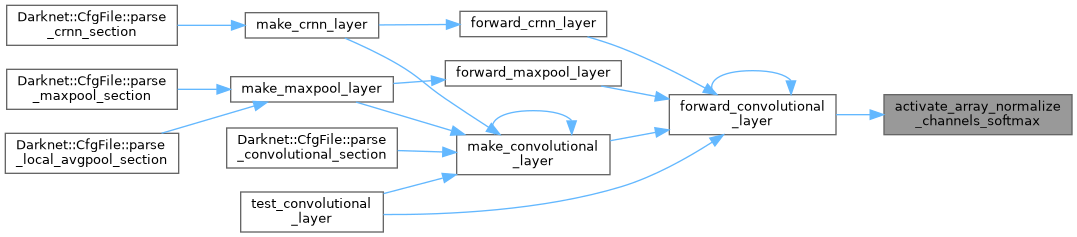

`void activate_array_normalize_channels_softmax(float *x, const int n, int batch, int channels, int wh_step, float *output, int use_max_val)`

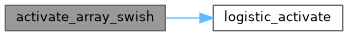

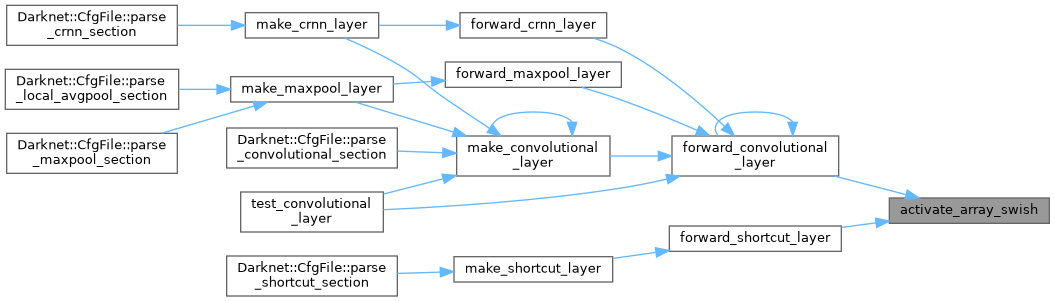

`void activate_array_swish(float *x, const int n, float *output_sigmoid, float *output)`

`static float elu_activate(float x)`

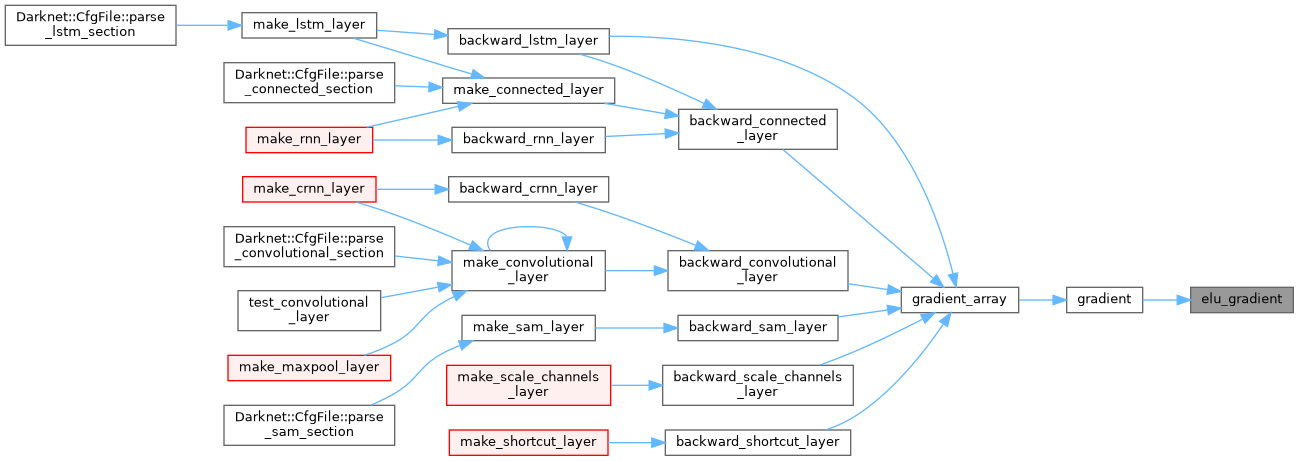

`static float elu_gradient(float x)`

`static float gelu_activate(float x)`

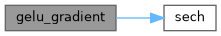

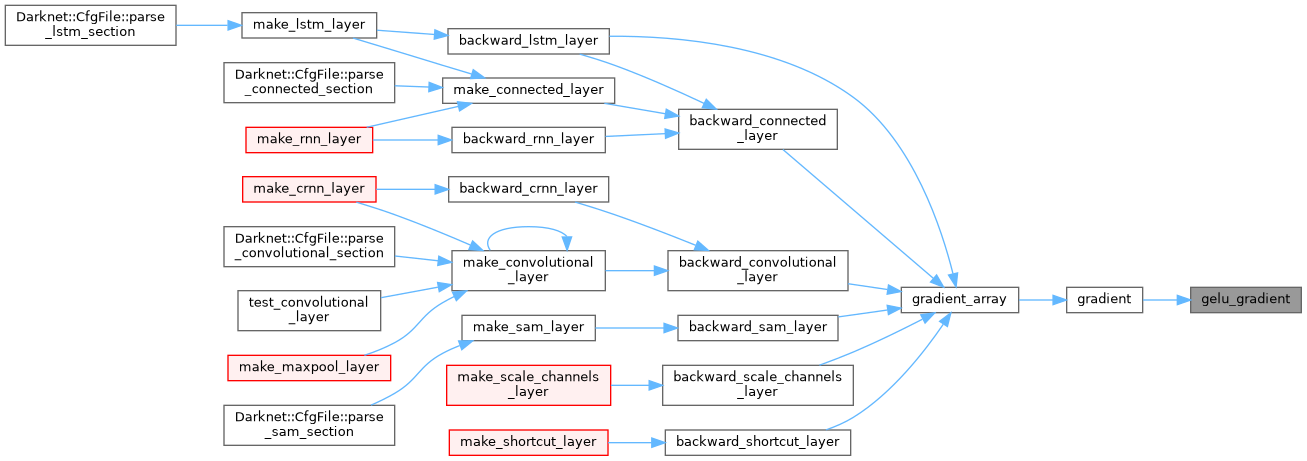

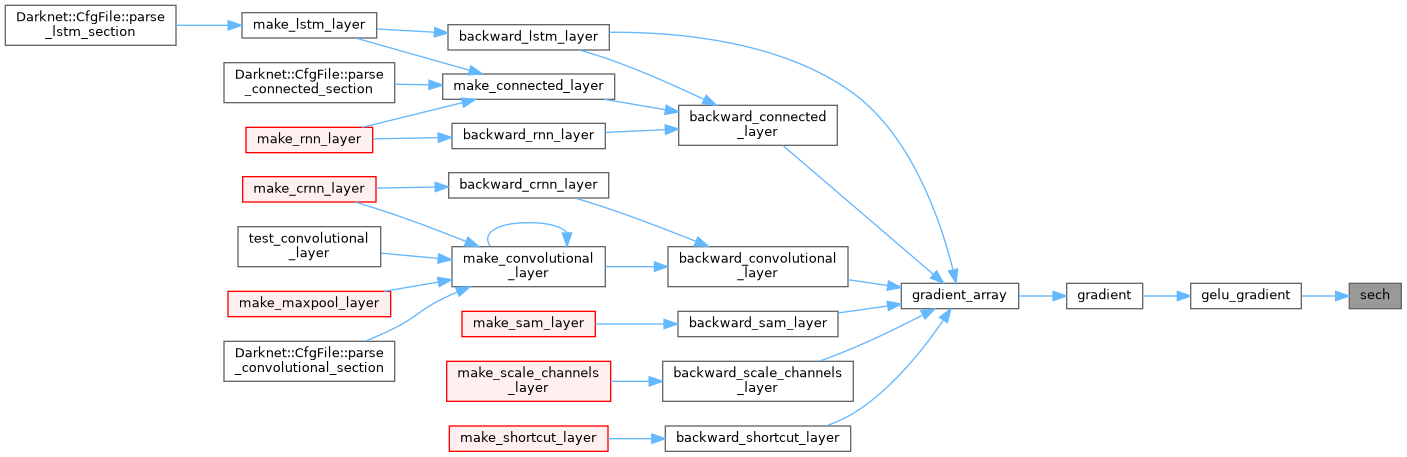

`static float gelu_gradient(float x)`

`ACTIVATION get_activation(char *s)`

`const char *get_activation_string(ACTIVATION a)`
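`get_activation` and `get_activation_string` convert between a configuration name and the enum value, in opposite directions. A single lookup table can drive both directions, as the sketch below shows. The enum subset, the lowercase names, and the fallback for unknown strings are all assumptions, and the function names are hypothetical.

```c
#include <string.h>

/* Hypothetical subset of activation kinds; not the real ACTIVATION enum. */
typedef enum { SK_LINEAR, SK_LOGISTIC, SK_RELU, SK_LEAKY, SK_MISH } act_kind;

/* One table drives both directions of the mapping. */
static const struct { act_kind kind; const char *name; } ACT_NAMES[] = {
    { SK_LINEAR, "linear" }, { SK_LOGISTIC, "logistic" }, { SK_RELU, "relu" },
    { SK_LEAKY, "leaky" },   { SK_MISH, "mish" },
};
enum { ACT_COUNT = sizeof ACT_NAMES / sizeof ACT_NAMES[0] };

/* String -> enum; unknown names fall back to linear (assumed policy). */
act_kind get_activation_sketch(const char *s)
{
    for (int i = 0; i < ACT_COUNT; ++i)
        if (strcmp(s, ACT_NAMES[i].name) == 0) return ACT_NAMES[i].kind;
    return SK_LINEAR;
}

/* Enum -> string; returns NULL for a kind missing from the table. */
const char *get_activation_string_sketch(act_kind a)
{
    for (int i = 0; i < ACT_COUNT; ++i)
        if (ACT_NAMES[i].kind == a) return ACT_NAMES[i].name;
    return NULL;
}
```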

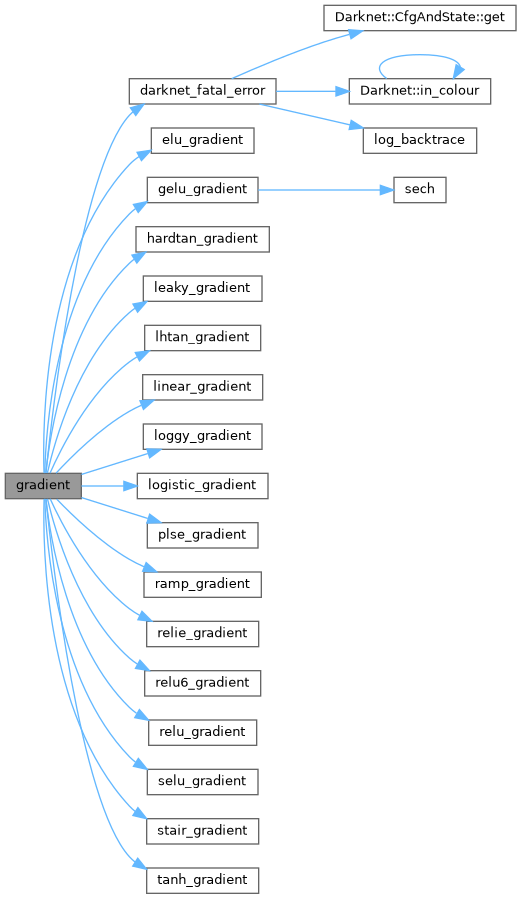

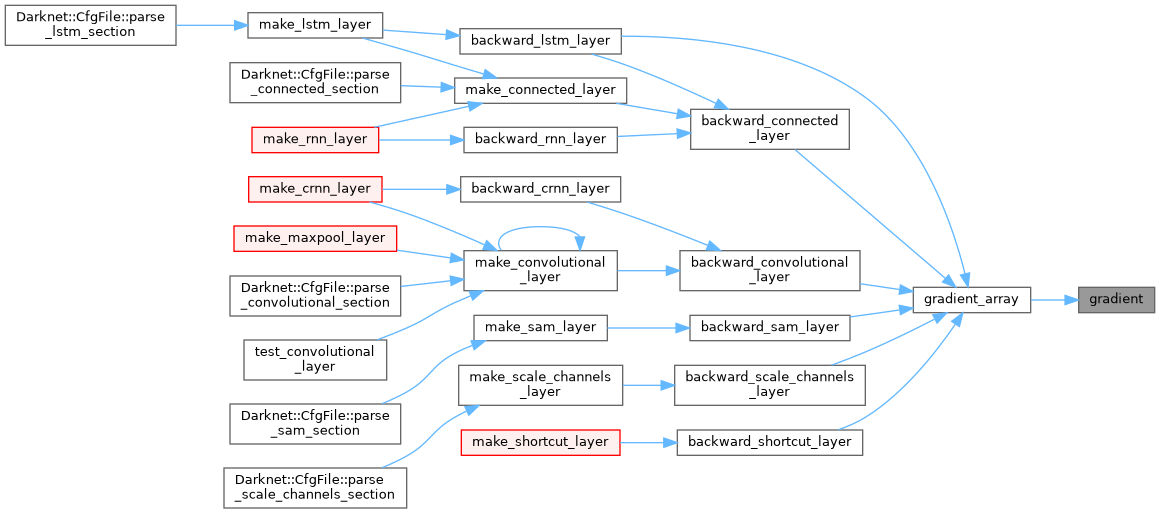

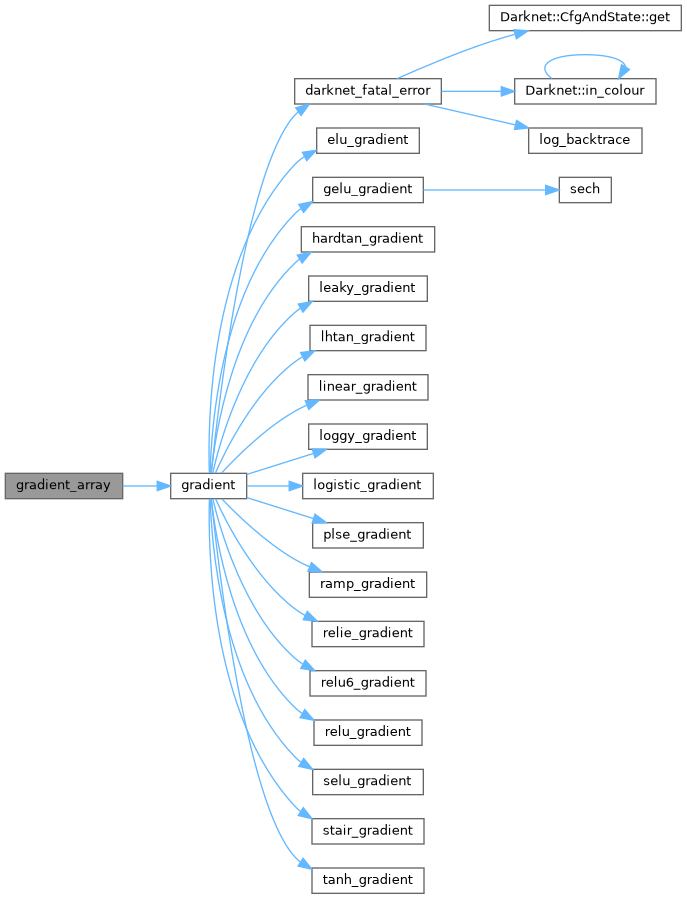

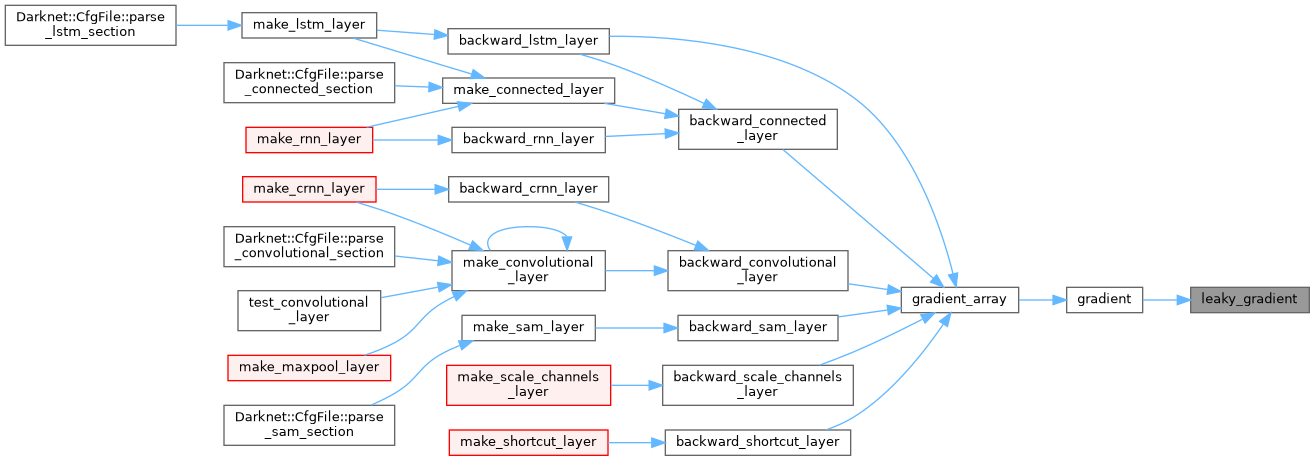

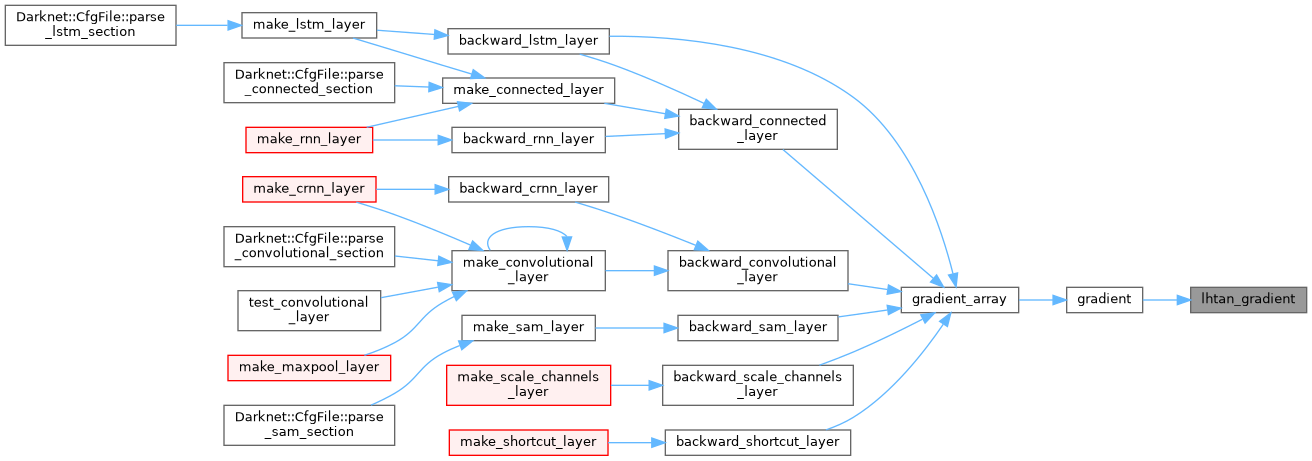

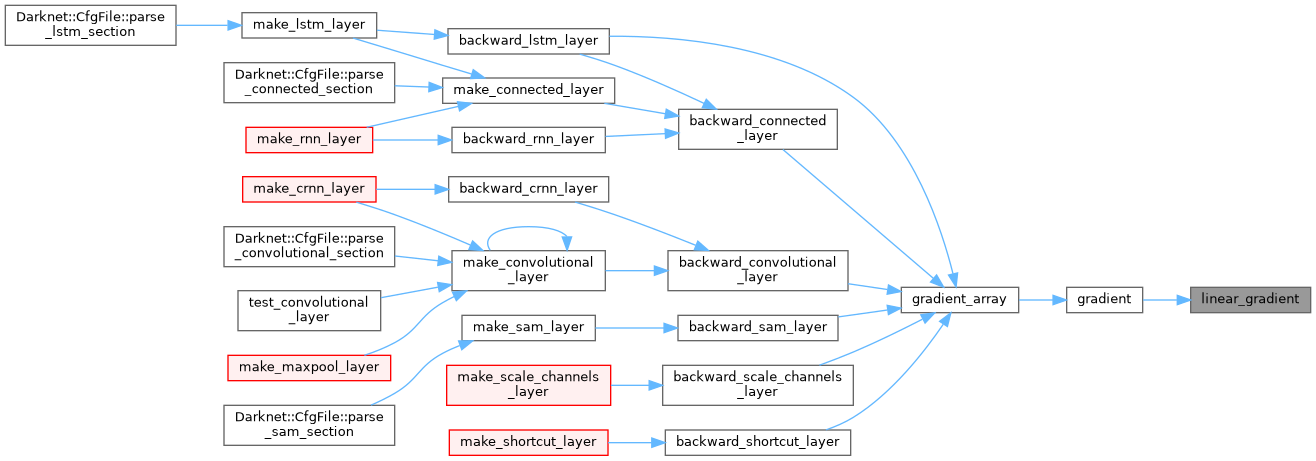

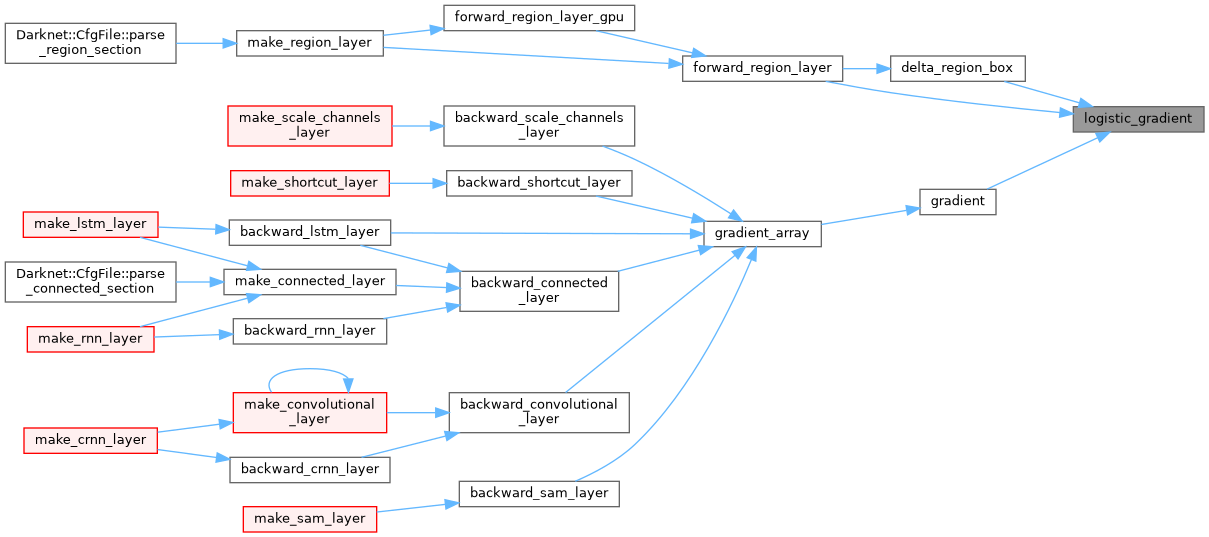

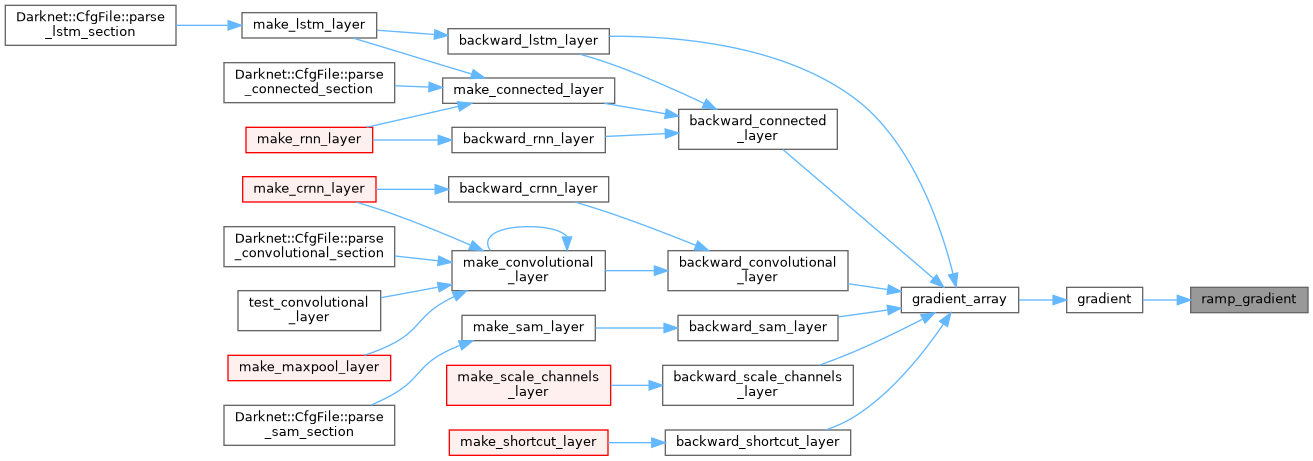

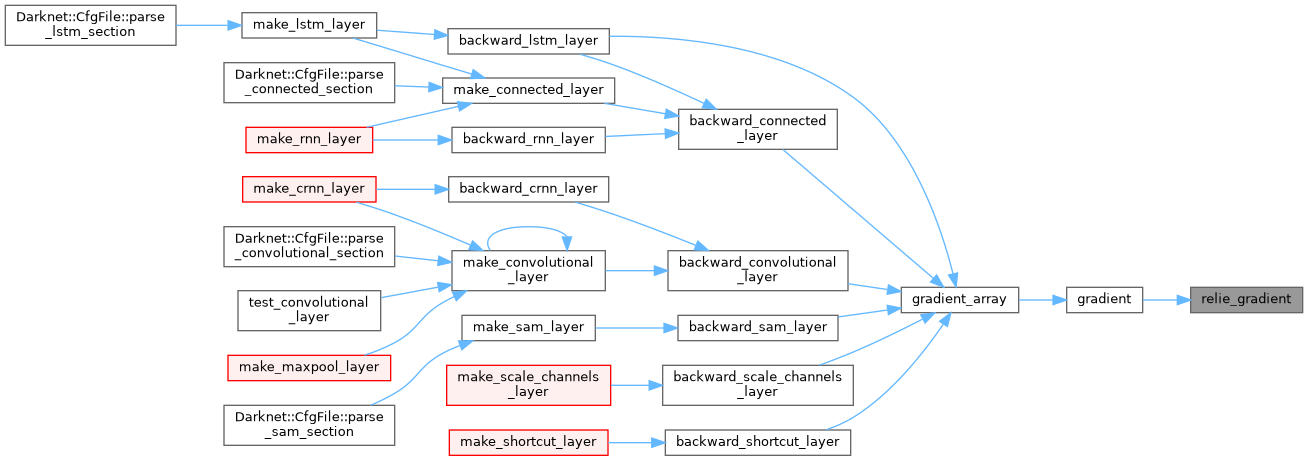

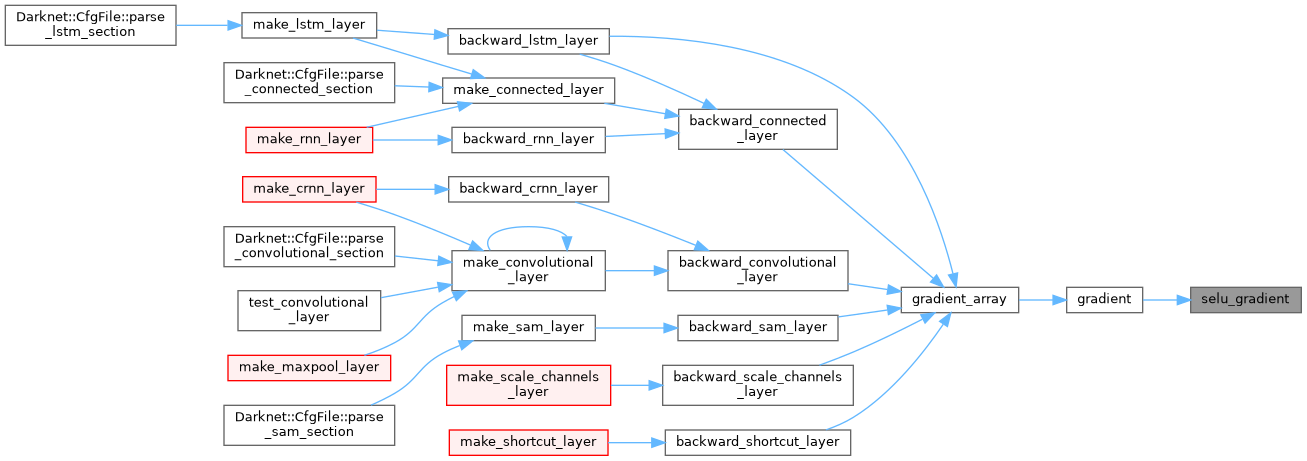

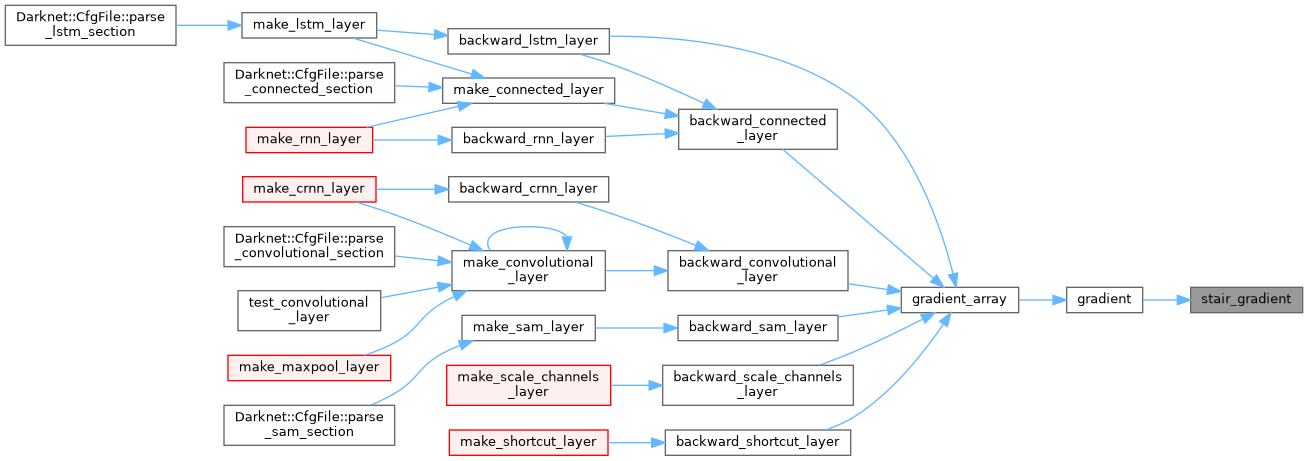

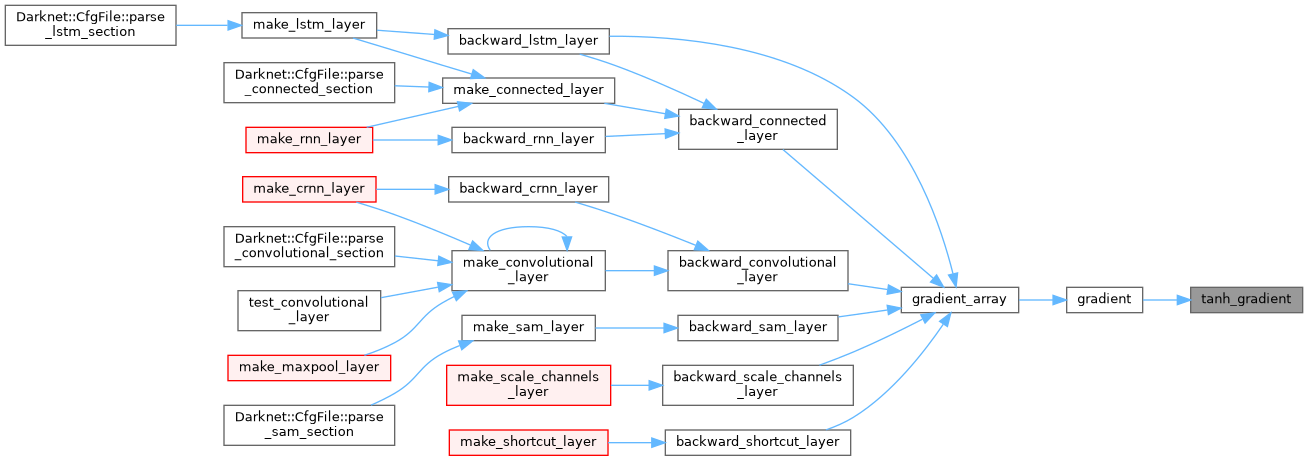

`float gradient(float x, ACTIVATION a)`

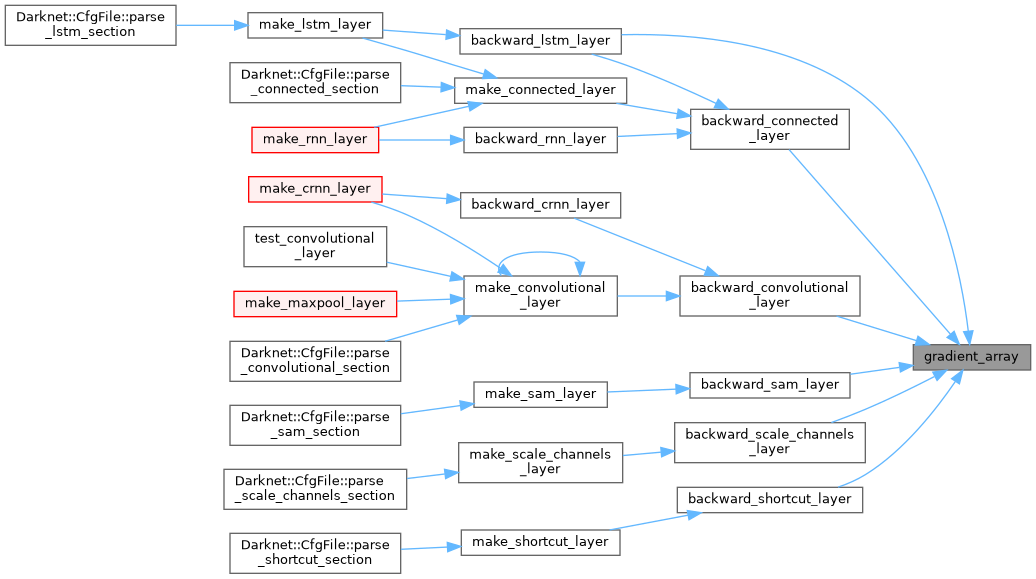

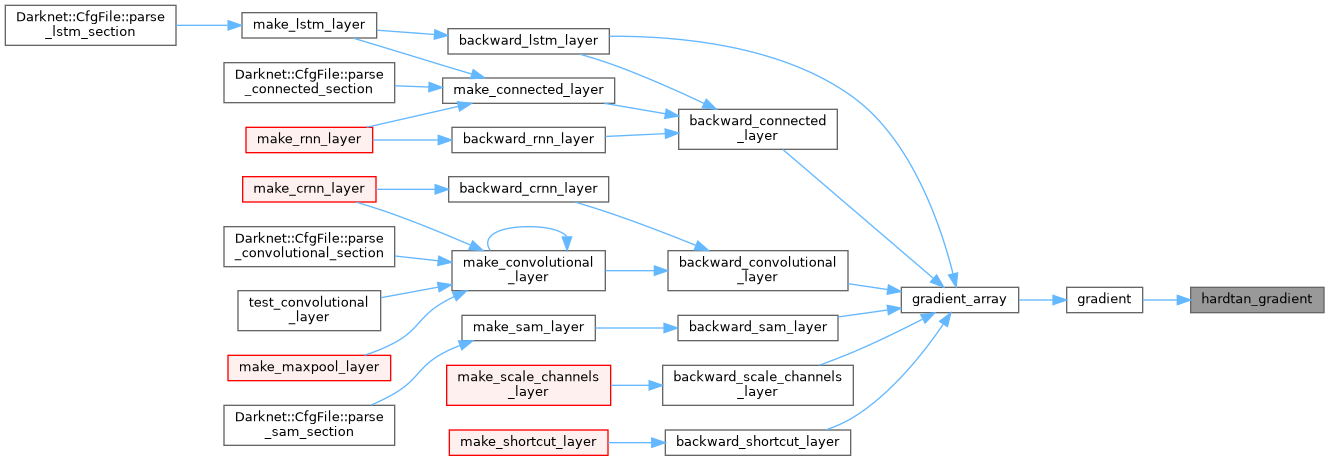

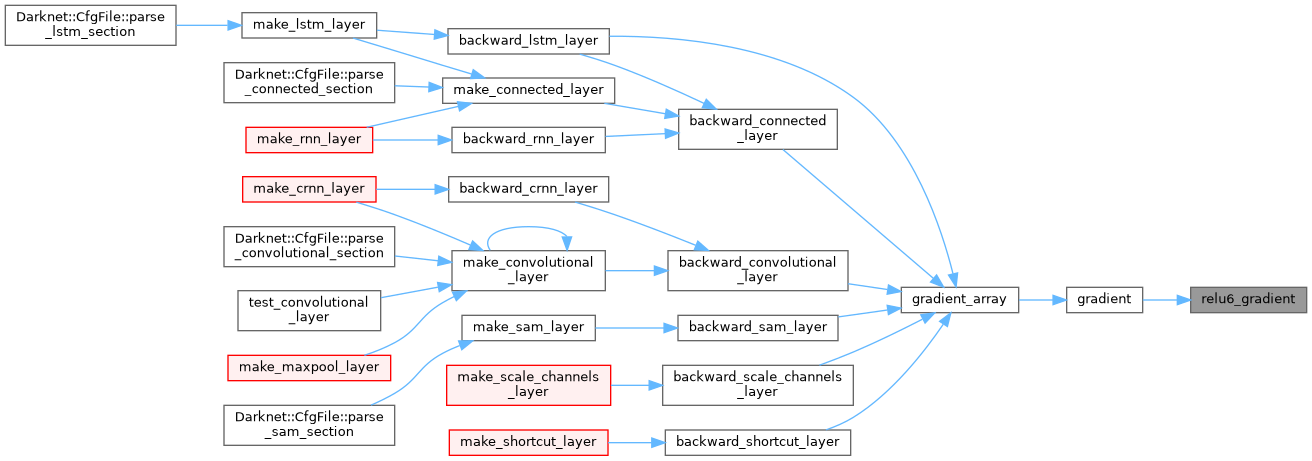

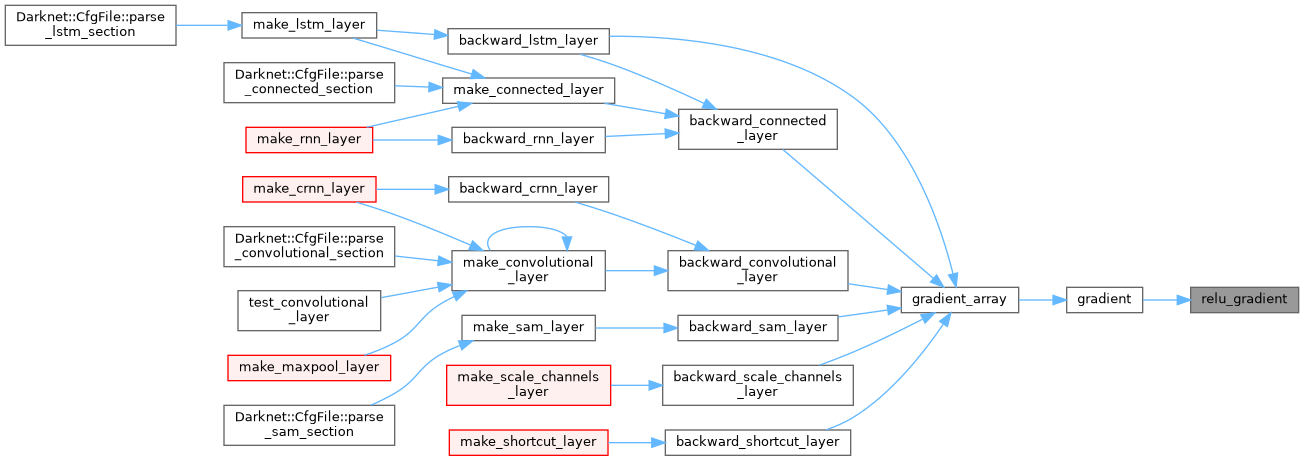

`void gradient_array(const float *x, const int n, const ACTIVATION a, float *delta)`
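`gradient_array` presumably applies the chain rule: it scales each incoming gradient in `delta` by the local derivative of the activation. Some derivatives are cheapest in terms of the activation's output. For tanh, for example, the derivative is `1 - y*y` with `y = tanh(z)`. The sketch below assumes `x` holds the already-activated outputs and fixes the `ACTIVATION` argument to tanh. The function names are hypothetical.

```c
/* Tanh derivative expressed through its output y = tanh(z). */
static float tanh_gradient_from_output(float y) { return 1.0f - y * y; }

/* Backward pass: scale each incoming gradient by the local derivative. */
void gradient_array_sketch(const float *x, const int n, float *delta)
{
    for (int i = 0; i < n; ++i) {
        delta[i] *= tanh_gradient_from_output(x[i]);   /* chain rule */
    }
}
```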

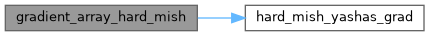

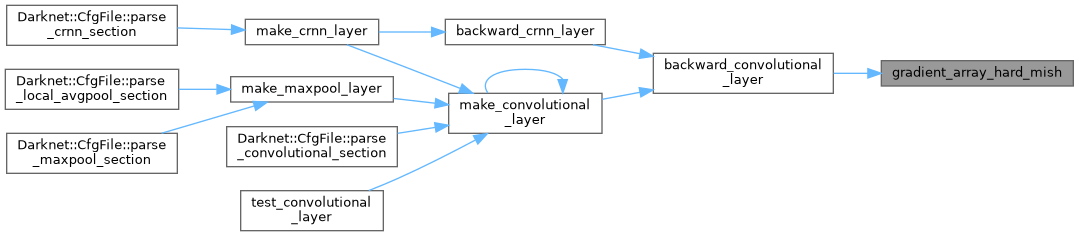

`void gradient_array_hard_mish(const int n, const float *activation_input, float *delta)`

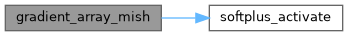

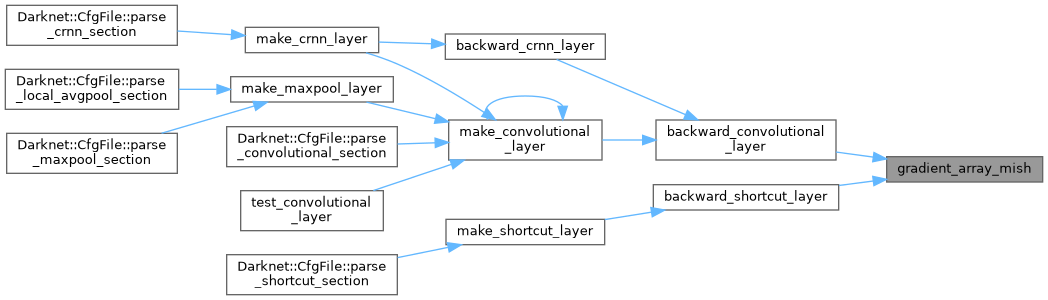

`void gradient_array_mish(const int n, const float *activation_input, float *delta)`

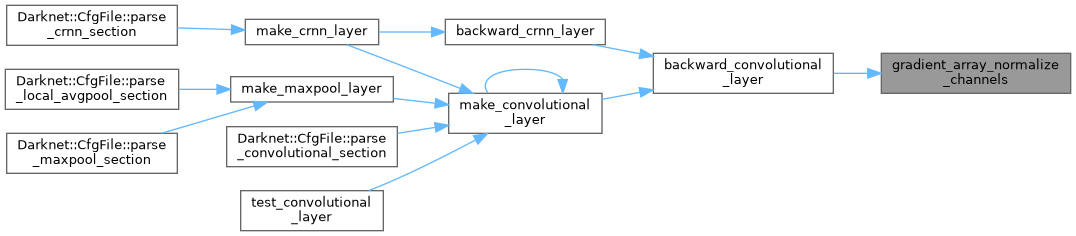

`void gradient_array_normalize_channels(float *x, const int n, int batch, int channels, int wh_step, float *delta)`

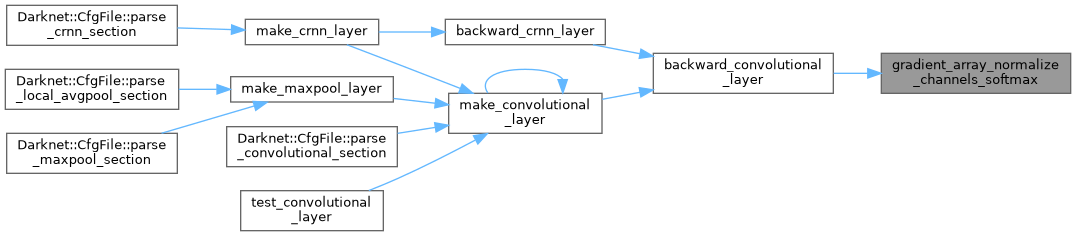

`void gradient_array_normalize_channels_softmax(float *x, const int n, int batch, int channels, int wh_step, float *delta)`

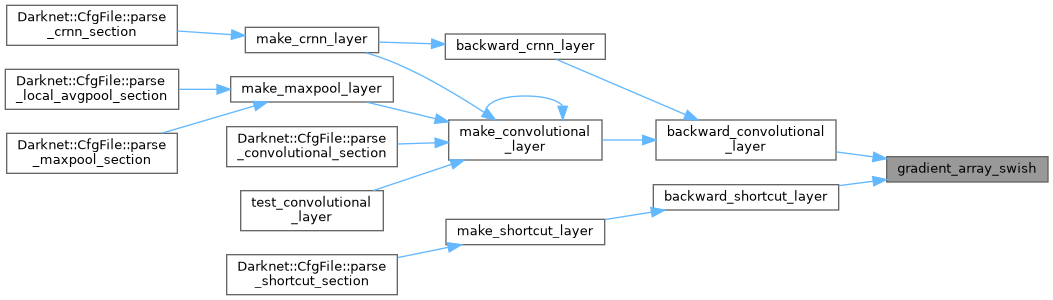

`void gradient_array_swish(const float *x, const int n, const float *sigmoid, float *delta)`

`static float hardtan_activate(float x)`

`static float hardtan_gradient(float x)`

`static float leaky_activate(float x)`

`static float leaky_gradient(float x)`
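Leaky ReLU and hard tanh are both piecewise linear, so each derivative is a constant on each piece. The sketch below assumes a negative slope of 0.1 for leaky ReLU and a clamp range of [-1, 1] for hard tanh. The names are hypothetical.

```c
/* Leaky ReLU with an assumed negative slope of 0.1. */
static float leaky_activate_sketch(float x) { return x > 0.0f ? x : 0.1f * x; }
static float leaky_gradient_sketch(float x) { return x > 0.0f ? 1.0f : 0.1f; }

/* Hard tanh: clamp to [-1, 1]; gradient is 1 strictly inside, 0 outside. */
static float hardtan_activate_sketch(float x)
{
    if (x < -1.0f) return -1.0f;
    if (x > 1.0f) return 1.0f;
    return x;
}
static float hardtan_gradient_sketch(float x) { return (x > -1.0f && x < 1.0f) ? 1.0f : 0.0f; }
```

Leaky ReLU preserves the sign of its input, and hard tanh's output lies strictly inside (-1, 1) exactly when its input does. Both gradient functions therefore return the same value whether they are given the pre-activation input or the activated output.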

`static float lhtan_activate(float x)`

`static float lhtan_gradient(float x)`

`static float linear_activate(float x)`

`static float linear_gradient(float x)`

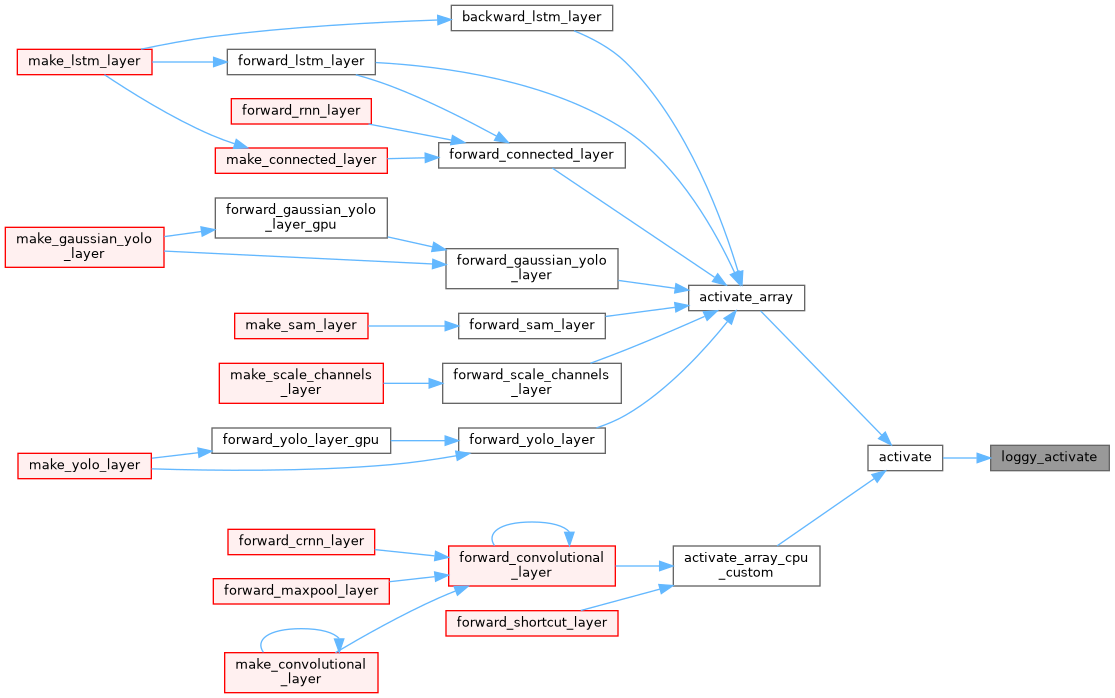

`static float loggy_activate(float x)`

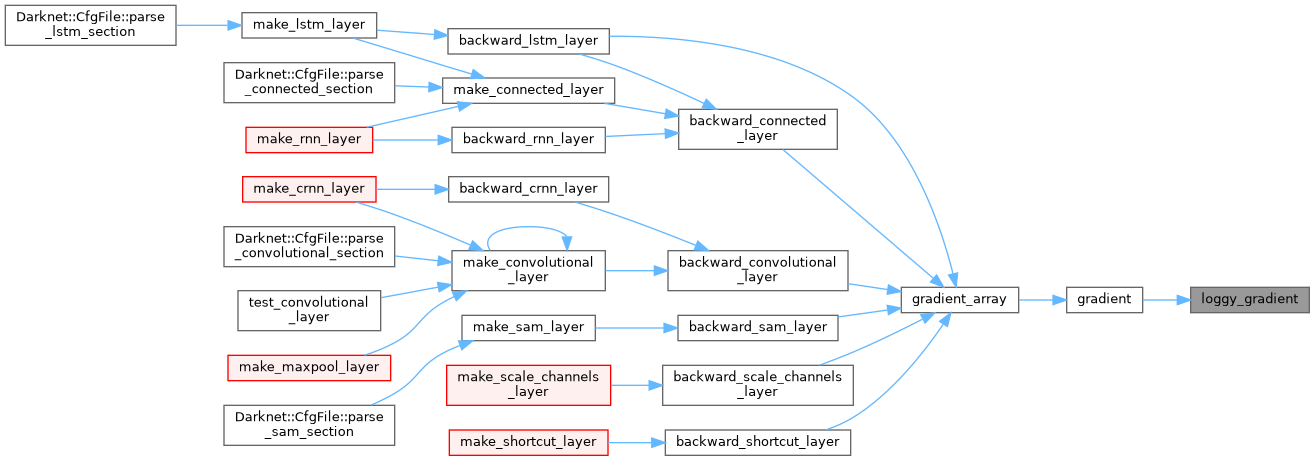

`static float loggy_gradient(float x)`

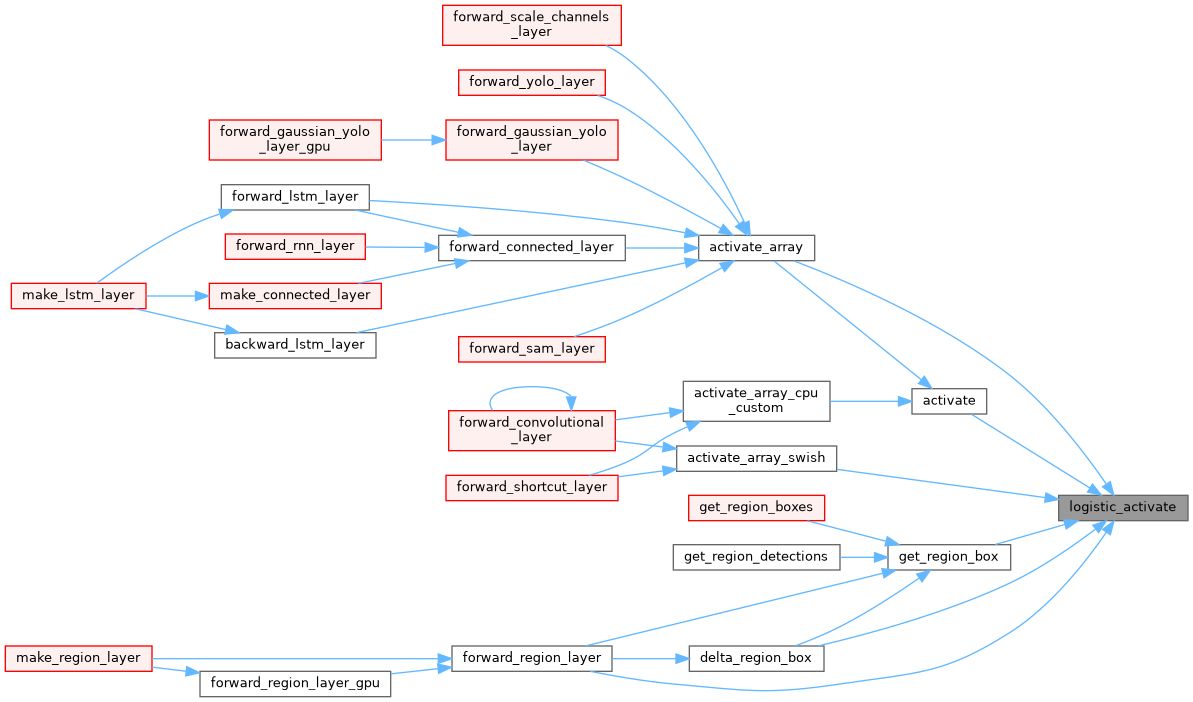

`static float logistic_activate(float x)`

`static float logistic_gradient(float x)`

`static float plse_activate(float x)`

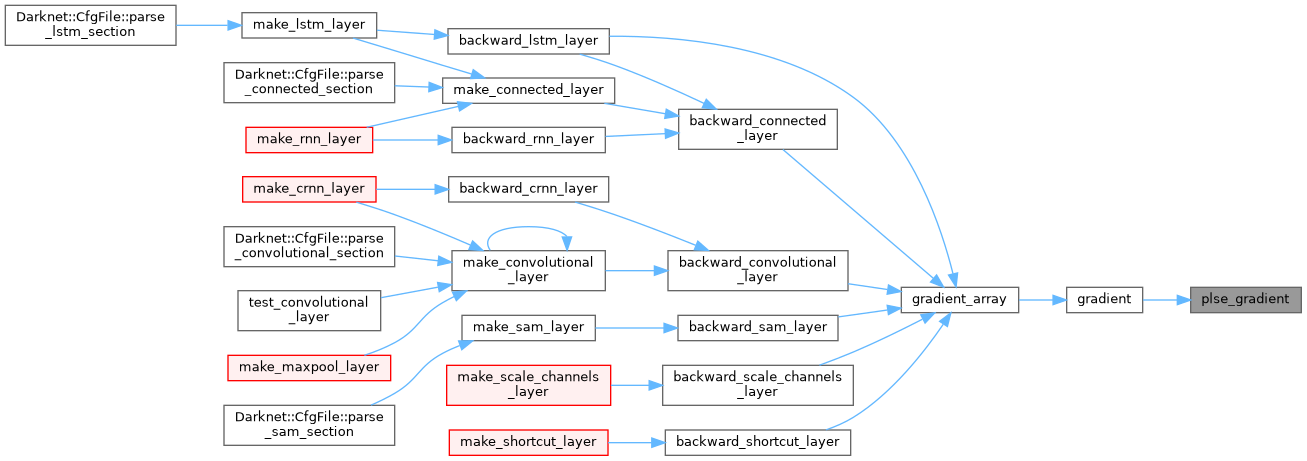

`static float plse_gradient(float x)`

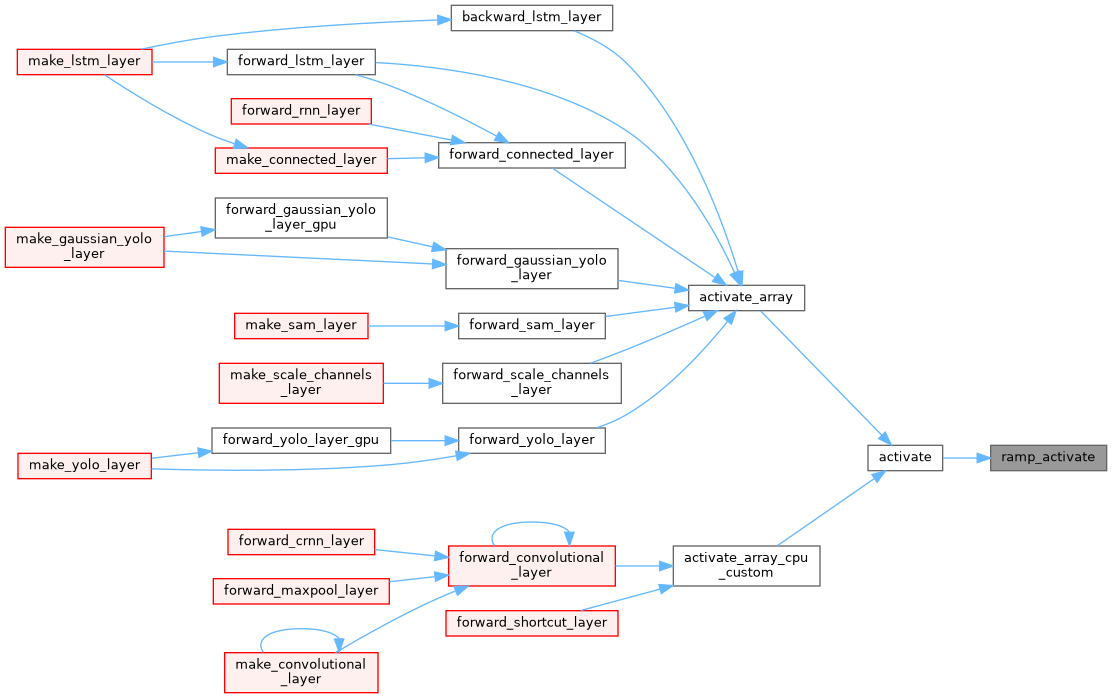

`static float ramp_activate(float x)`

`static float ramp_gradient(float x)`

`static float relie_activate(float x)`

`static float relie_gradient(float x)`

`static float relu6_activate(float x)`

`static float relu6_gradient(float x)`

`static float relu_activate(float x)`

`static float relu_gradient(float x)`

`static float sech(float x)`

`static float selu_activate(float x)`

`static float selu_gradient(float x)`

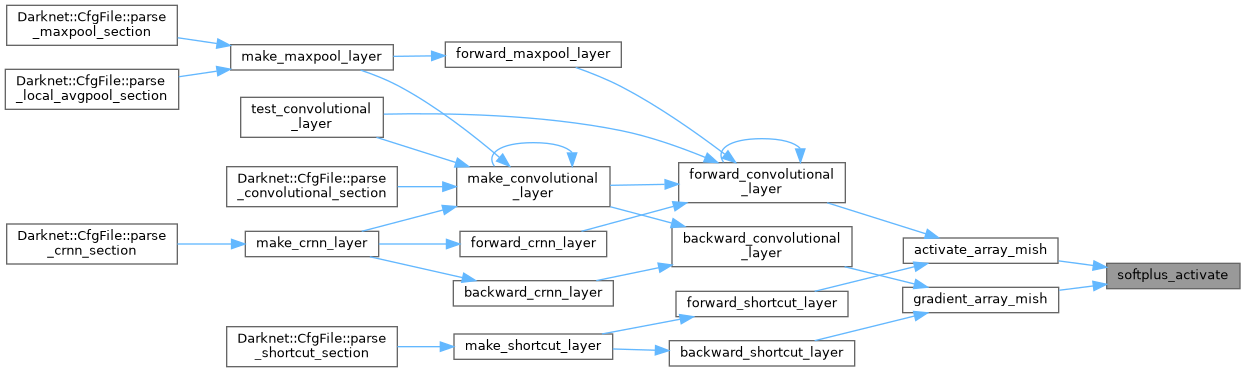

`static float softplus_activate(float x, float threshold)`

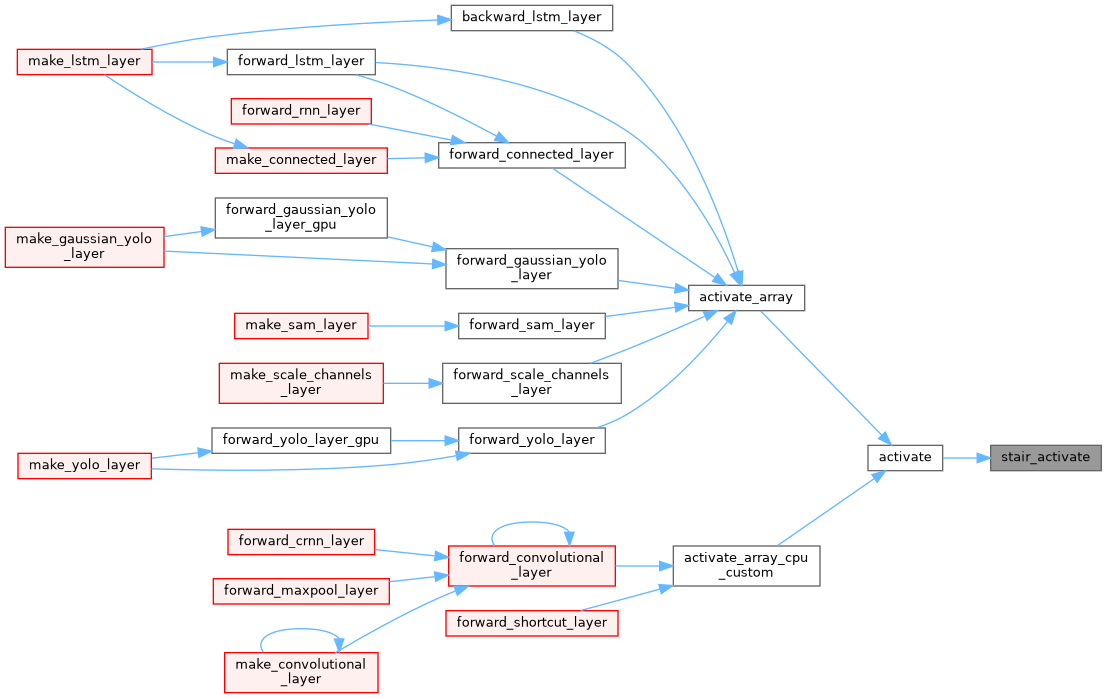

`static float stair_activate(float x)`

`static float stair_gradient(float x)`

`static float tanh_activate(float x)`

`static float tanh_gradient(float x)`